Scheduled jobs are essential to business continuity. They can be developed in many different languages (including Java, C#, Go, Python, and R) and packaged as container images that are stored in Docker registries. The resulting containers can then be orchestrated, and can communicate with one another, in a Kubernetes cluster.

But when working with scheduled jobs, how can you collate, track, and manage the artifacts related to them at all times and use their metadata for analysis and improvements? A universal package manager—also called a universal repository manager—can help.

Here's a step-by-step look at how these tools work and how you can use them with your DevOps build pipelines for containerized, scheduled jobs.

1. Developing scheduled jobs

First, when developing scheduled jobs, you need to manage your build artifacts and their metadata using a package manager that supports various languages and continuous integration (CI) tools such as Jenkins. The package manager resolves dependencies from remote repositories and stores the artifacts you build in local repositories.

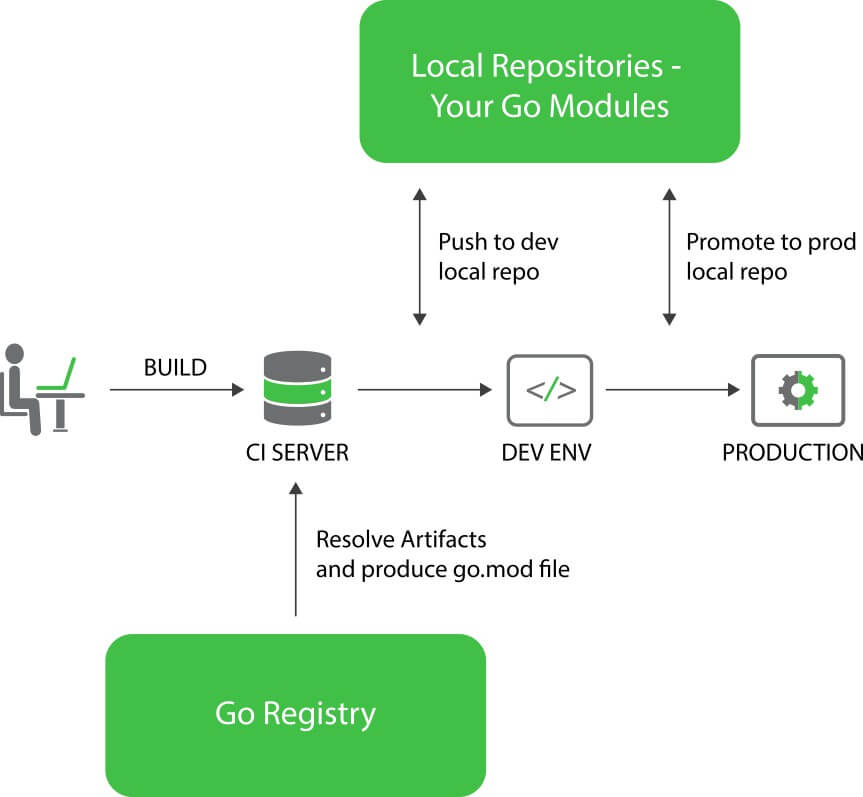

For example, if you are building your scheduled jobs in Go, you can use a Go registry to resolve dependencies and to deploy the Go packages you build to your local repositories. When a Go module is built and published, its go.mod file is updated to reflect the project's internal dependencies.

You can also publish a variety of parameters, such as build name and build number, all of which contribute to the artifacts' metadata.
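As a rough illustration of what such metadata might look like, here is a minimal Python sketch of a build record serialized to JSON. The `BuildInfo` class, its field names, and the sample values are all hypothetical; a real repository manager defines its own build-info schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class BuildInfo:
    """Hypothetical build record; field names are illustrative only."""
    name: str
    number: int
    artifacts: list = field(default_factory=list)
    dependencies: dict = field(default_factory=dict)  # module path -> version


def to_json(build: BuildInfo) -> str:
    # Sort keys so the same build always serializes to the same text.
    return json.dumps(asdict(build), sort_keys=True, indent=2)


# Sample values are made up for the example.
build = BuildInfo(
    name="hourly-report-job",
    number=42,
    artifacts=["hourly-report-job-1.4.0.zip"],
    dependencies={"example.com/reporting/core": "v1.2.0"},
)
print(to_json(build))
```

Keeping the build name and number alongside the artifact and dependency lists is what makes later comparisons between builds possible.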

A universal binary repository manager supports similar functionalities for all platforms, including Java (Maven and Gradle builds), C# (NuGet), Python (PyPI and Conda), R (CRAN), Helm, Go, and more.

Build information can be used for a variety of metrics, including:

- Measuring the number of builds that were sent to QA/release
- Figuring out dependency variations in different builds
- Identifying which builds are making your project bulky
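The three metrics above can be sketched in a few lines of Python. This assumes build records are available as plain dictionaries; the keys (`status`, `size_bytes`, `dependencies`) and the sample data are hypothetical and do not come from any particular tool.

```python
def count_by_status(builds, status):
    """Count builds that reached a given stage, such as 'qa' or 'released'."""
    return sum(1 for b in builds if b.get("status") == status)


def dependency_diff(old, new):
    """Compare two module -> version mappings from different builds."""
    added = {m: v for m, v in new.items() if m not in old}
    removed = {m: v for m, v in old.items() if m not in new}
    changed = {
        m: (old[m], new[m])
        for m in old.keys() & new.keys()
        if old[m] != new[m]
    }
    return {"added": added, "removed": removed, "changed": changed}


def largest_builds(builds, top=1):
    """Return the builds with the largest total artifact size."""
    return sorted(builds, key=lambda b: b["size_bytes"], reverse=True)[:top]


# Made-up records for illustration.
builds = [
    {"number": 41, "status": "qa", "size_bytes": 12_000_000,
     "dependencies": {"example.com/core": "v1.1.0", "example.com/legacy": "v0.9.0"}},
    {"number": 42, "status": "released", "size_bytes": 19_500_000,
     "dependencies": {"example.com/core": "v1.2.0", "example.com/pdf": "v2.0.0"}},
    {"number": 43, "status": "qa", "size_bytes": 12_400_000,
     "dependencies": {"example.com/core": "v1.2.0"}},
]

print(count_by_status(builds, "qa"))
print(dependency_diff(builds[0]["dependencies"], builds[1]["dependencies"]))
print([b["number"] for b in largest_builds(builds)])
```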

Figure 1: Getting Go modules in production with a universal package manager.

2. Containerizing a scheduled job and managing Docker information

Once you have developed a scheduled job, it needs to run at set intervals.

Imagine a job that runs every hour and puts a certain load on your production servers, with output and log files that are saved for years. When you have several such jobs running simultaneously, your infrastructure becomes prone to failures and to errors caused by heavy load.
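To get a feel for how quickly retained output adds up, here is a back-of-the-envelope calculation in Python. The figures (5 MiB of output per run, three years of retention) are assumptions chosen purely for illustration.

```python
DAYS_PER_YEAR = 365


def retained_bytes(bytes_per_run, runs_per_day, years):
    """Total output kept on disk if nothing is ever deleted."""
    return bytes_per_run * runs_per_day * DAYS_PER_YEAR * years


# Assumed figures: an hourly job writing 5 MiB per run, kept for 3 years.
total = retained_bytes(bytes_per_run=5 * 1024**2, runs_per_day=24, years=3)
print(f"{total / 1024**3:.1f} GiB for a single job")
```

Multiply the result by the number of jobs running side by side to estimate the combined footprint.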

In this new age of containerized applications, you can move toward containerizing scheduled jobs, as long as you have a way to manage the related information. A package manager can provide the Docker images needed to spin up the Docker containers in a continuous integration job. It can also store the information about those Docker images, in a layered format, as Docker artifacts. Thus, you can use a Docker registry or you can perform the tasks of a scheduled job in infrastructure-free Docker proxies.

The great thing is that these Docker registries can be used in a client-native way with a package manager, making them easier to understand. Also, as with every build language, the metadata for the Docker repositories is stored. You can use it for analyses, such as discovering the dynamic container or layer size for each scheduled job run, or comparing two builds to find differences in the artifacts each contains.
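As one possible way to run this kind of analysis, the sketch below sums layer sizes and compares the layers of two image manifests. It assumes manifests shaped like the Docker image manifest format, where each entry in `layers` carries a `size` and a `digest`. The `make_manifest` helper, the placeholder digests, and the sizes are invented for the example.

```python
def make_manifest(layers):
    """Hypothetical helper: build a minimal manifest-like dict for the example."""
    return {
        "schemaVersion": 2,
        "layers": [{"digest": digest, "size": size} for digest, size in layers],
    }


def total_layer_bytes(manifest):
    """Sum the compressed sizes of all layers listed in a manifest."""
    return sum(layer["size"] for layer in manifest["layers"])


def layer_changes(old, new):
    """List the layer digests that appear in only one of two manifests."""
    old_digests = {layer["digest"] for layer in old["layers"]}
    new_digests = {layer["digest"] for layer in new["layers"]}
    return {
        "added": sorted(new_digests - old_digests),
        "removed": sorted(old_digests - new_digests),
    }


# Placeholder digests and sizes; real digests are full SHA-256 hashes.
run_1 = make_manifest([("sha256:base", 2_800_000),
                       ("sha256:deps", 15_000_000),
                       ("sha256:app-v1", 4_000_000)])
run_2 = make_manifest([("sha256:base", 2_800_000),
                       ("sha256:deps", 15_000_000),
                       ("sha256:app-v2", 4_600_000)])

print(total_layer_bytes(run_1), total_layer_bytes(run_2))
print(layer_changes(run_1, run_2))
```

Because unchanged layers share the same digest, the comparison shows at a glance which part of the image actually changed between two runs.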

3. Running multiple Docker containers in a Kubernetes cluster and using a Helm charts registry

Consider a real-world environment in which these scheduled jobs are usually interdependent and each may need a different environment to run. For example, financial organizations usually build these jobs for complex reports that are fairly interdependent and that can have different environmental, programming, or scripting requirements.

In such cases, multiple Docker containers with different operating systems or memory parameters, each functioning individually, can be grouped to work together in a Kubernetes cluster.

A package manager in this case can work as a Kubernetes registry to store and manage information on these Kubernetes clusters. You can use Helm charts for modeling the cluster, and can also store and manage them within a package manager using a remote Helm repository.
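To make this more concrete, the sketch below builds the kind of Kubernetes CronJob manifest that a Helm chart template might render for one hourly job, and prints it as JSON. The `cronjob_manifest` function, the job name, and the registry URL are hypothetical; the manifest fields follow the Kubernetes `batch/v1` CronJob resource.

```python
import json


def cronjob_manifest(name, image, schedule):
    """Return a minimal Kubernetes CronJob manifest as a plain dict."""
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": name},
        "spec": {
            "schedule": schedule,  # standard cron syntax
            "concurrencyPolicy": "Forbid",  # skip a run if the previous one is still going
            "jobTemplate": {
                "spec": {
                    "template": {
                        "spec": {
                            "containers": [{"name": name, "image": image}],
                            "restartPolicy": "OnFailure",
                        }
                    }
                }
            },
        },
    }


# Hypothetical job name and image location.
manifest = cronjob_manifest(
    name="hourly-report",
    image="registry.example.com/jobs/hourly-report:1.4.0",
    schedule="0 * * * *",  # at minute 0 of every hour
)
print(json.dumps(manifest, indent=2))
```

In a Helm chart, values such as the image tag and schedule would typically be supplied as chart values rather than hard-coded, so the same chart can describe each interdependent job.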

Once you've deployed these charts, you can view their metadata in the Helm repository. This makes it even easier to manage automation related to the jobs' infrastructure, and you have it all in one place for easier access.

Accelerate your DevOps

Developing, managing, and analyzing scheduled jobs becomes much easier when you use containerized clusters with a repository manager. Use one to accelerate the process of developing scheduled jobs the DevOps way.

These tools offer a simple, central solution for artifact maintenance and analysis. Developers and application support engineers can monitor all the details using the metadata that's stored and readily available in the repository manager.
