The last decade has brought significant improvements in how teams approach software quality. Practices such as test-driven development have reshaped expectations so thoroughly that, for most engineers, a project without automated unit tests is now a red flag.

Unfortunately, writing beautiful code and reaching for perfect test coverage do not mean much until code is deployed to production and exercised by real-world users. The increased adoption of distributed systems has also raised the operational complexity of production environments, with more places for things to fail, especially in unpredictable ways.

Here is why you need to look beyond testing and IT operations monitoring, and how to instrument your applications so they are observable in real time, giving you a deeper understanding of what is happening inside your production systems.

No system is immune to failure

In production environments, the theory of what's in the code and the reality of the practical world never align perfectly. Failure happens, and it's inevitable. Despite all efforts to write perfect code and extensively test it, the truth is that some things are unpredictable. Your best bet is to embrace this; be ready to understand and mitigate these situations.

There is a significant danger in trying to prevent failure at all costs. It creates the illusion that failure cannot happen, so that when it does occur, you will fail harder and won't be used to handling it.

The importance of being comfortable with failure is amplified by the popularity of distributed architecture patterns such as microservices. Despite their strong benefits for developer experience and scalability, their operational complexity demands much greater visibility into their many moving parts.

With distributed systems, you are distributing the places where things might go wrong, and you start facing situations of partial failures that can be harder to detect and understand.

Beyond monitoring: The unknown unknowns

Monitoring is an important part of production visibility. Alerts and metrics provide valuable information about the symptoms of failure—for example, knowing when response times go above a certain threshold or whether the error rate increased after a deployment.

Monitoring stops being useful when you need to go beyond these predefined symptoms and metrics, in particular when you are troubleshooting a situation you have never encountered before.

Observability helps you overcome this new class of problems: the unknown unknowns, unpredictable events that take place despite your best efforts to build failure-free systems. While monitoring focuses on detecting that something is wrong, observability gives you the tools to ask why and ultimately understand your systems better.

The production visibility spectrum

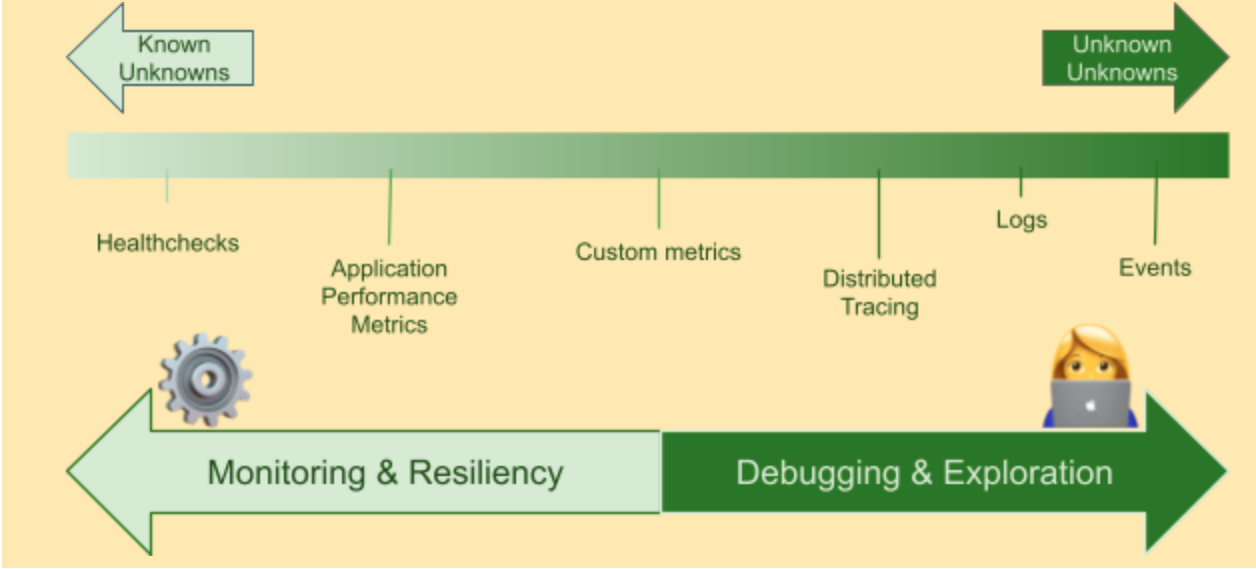

Figure 1 shows several ways of observing a system, all of which belong in a production visibility strategy. They range from automation, monitoring, and robustness on the left to exploration, observability, and debugging on the right.

Figure 1. The spectrum of production visibility tools, from monitoring to observability. Source: Pierre Vincent

On the far left, health checks are very basic signals that track whether a service is up and healthy. These checks are typically reused by automated processes to, for example, trigger the restart of a service that crashed.
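A health check can be as small as an HTTP endpoint that reports whether the service and its dependencies are usable. The sketch below uses only Python's standard library; the `/healthz` path, the dependency names, and the `check_health` helper are hypothetical choices, not something prescribed by the article. It answers 200 when everything is healthy and 503 otherwise, the kind of simple signal an orchestrator or supervisor can act on.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def check_health(dependencies):
    """Map dependency probe results (name -> bool) to an HTTP status and JSON body."""
    failing = sorted(name for name, ok in dependencies.items() if not ok)
    if failing:
        return 503, {"status": "unhealthy", "failing": failing}
    return 200, {"status": "healthy", "failing": []}


class HealthHandler(BaseHTTPRequestHandler):
    # Hypothetical probe results; a real service would ping its database, cache, etc.
    dependencies = {"database": True, "cache": True}

    def do_GET(self):
        if self.path != "/healthz":  # assumed path for the health endpoint
            self.send_error(404)
            return
        code, body = check_health(self.dependencies)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(code)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port=8080):
    """Block forever, answering health checks on the given port."""
    HTTPServer(("0.0.0.0", port), HealthHandler).serve_forever()
```

Calling `serve()` starts the endpoint; a supervisor that polls `/healthz` and restarts the process after repeated 503 responses or timeouts is the kind of automated reaction described above.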

Application performance metrics are a limited set of time-series measurements that give a system-wide view of application health: request throughput, error rate, response time, etc.
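As a rough illustration of how these metrics are derived, the sketch below summarizes one time window of request records into throughput, error rate, and 95th-percentile latency. It assumes that only 5xx responses count as errors and uses the nearest-rank percentile method; the `Request` record and `performance_metrics` function are hypothetical. In production these numbers are usually computed by a metrics backend rather than in application code.

```python
from dataclasses import dataclass


@dataclass
class Request:
    status: int        # HTTP status code returned to the caller
    latency_ms: float  # time taken to serve the request


def performance_metrics(requests, window_seconds):
    """Summarize one time window of requests into throughput, error rate and p95 latency."""
    if not requests:
        return {"throughput_rps": 0.0, "error_rate": 0.0, "p95_latency_ms": None}
    errors = sum(1 for r in requests if r.status >= 500)  # assumption: only 5xx are errors
    latencies = sorted(r.latency_ms for r in requests)
    # Nearest-rank p95: ceil(0.95 * n), computed with integers to avoid float rounding.
    rank = (95 * len(latencies) + 99) // 100
    return {
        "throughput_rps": len(requests) / window_seconds,
        "error_rate": errors / len(requests),
        "p95_latency_ms": latencies[rank - 1],
    }
```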

Once you understand what "normal" looks like for these metrics, you can apply thresholds and trigger automated alerts if they are breached. At this point in the spectrum, you are still in monitoring territory and covering the bare minimum of symptom detection, without enough data to understand the underlying causes.
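One simple way to turn "what normal looks like" into a threshold is to take a baseline of past values and alert when the metric stays above the baseline mean plus a few standard deviations. This is a sketch under assumptions: the factor `k=3`, the three-consecutive-breach rule (which avoids paging on a single noisy sample), and the function names are illustrative, not recommendations from the article.

```python
import statistics


def threshold_from_baseline(baseline, k=3.0):
    """Alert threshold: mean of a 'normal' baseline plus k population standard deviations."""
    return statistics.mean(baseline) + k * statistics.pstdev(baseline)


def should_alert(recent, threshold, consecutive=3):
    """Fire only if the last `consecutive` samples all exceed the threshold."""
    if len(recent) < consecutive:
        return False
    return all(value > threshold for value in recent[-consecutive:])
```

For example, a p95 latency baseline of `[90, 110, 90, 110]` ms gives a threshold of 130 ms, so `should_alert([120, 140, 150, 160], 130.0)` fires while `should_alert([140, 150, 120], 130.0)` does not.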

Luckily, time-series metrics don't stop at showing you system-wide symptoms. You can also use them to troubleshoot issues. Most infrastructure metrics, such as high CPU usage, shouldn't warrant an alert; after all, if you're paying for it, it's great if you're using it. But an abnormal spike correlating with an error rate increase will help an investigation.
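Checking whether a CPU spike "correlates" with an error-rate increase can be made concrete with a Pearson correlation over two series sampled at the same timestamps. The sketch below assumes aligned samples and an arbitrary cut-off of 0.8; the function names are hypothetical. A high coefficient only tells you where to look next; it does not prove the CPU spike caused the errors.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally sized numeric series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0  # a flat series carries no co-movement signal
    return cov / (spread_x * spread_y)


def moves_together(cpu_percent, error_rate, min_r=0.8):
    """True if CPU usage and error rate rose and fell together in this window."""
    return pearson(cpu_percent, error_rate) >= min_r
```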

Custom application-level metrics are also a great way to steer debugging in the right direction. For example, a sharp increase in the number of new user registrations can be linked to a sudden increase in latency.
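A custom application-level metric can be as simple as counting a business event per time bucket, so its curve can be laid next to latency graphs. The `BucketedCounter` class and `register_user` function below are hypothetical; in practice you would increment a counter in your metrics library of choice rather than keep counts in process memory.

```python
import time
from collections import Counter


class BucketedCounter:
    """Hypothetical in-process counter that groups increments into fixed time buckets."""

    def __init__(self, bucket_seconds=60):
        self.bucket_seconds = bucket_seconds
        self.counts = Counter()

    def increment(self, amount=1, now=None):
        now = time.time() if now is None else now
        # Align the timestamp to the start of its bucket (e.g. the start of the minute).
        bucket_start = int(now // self.bucket_seconds) * self.bucket_seconds
        self.counts[bucket_start] += amount

    def series(self):
        """(bucket_start, count) pairs in chronological order."""
        return sorted(self.counts.items())


user_registrations = BucketedCounter(bucket_seconds=60)


def register_user(email, now=None):
    # Persisting the new user is omitted; only the metric update is shown.
    user_registrations.increment(now=now)
```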

More details require more effort to understand

As you move farther to the right on the spectrum, the amount of available data and detail increases, but so does the need for inquisitive thinking to get to the root of a problem.

Truth be told, machines aren't particularly good at troubleshooting unknown unknowns. Humans do a much better job at debugging and at applying past knowledge, intuition, and muscle memory to explore vast amounts of data. Distributed tracing, logs, and events provide this extra depth of context.

Distributed tracing paints a picture of requests traveling through the system, correlating events from a user browsing a mobile app all the way through database queries. Tracing is an excellent tool to surface bottlenecks, circular service dependencies, and performance bugs.
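Tracing works by propagating a trace identifier from service to service so that every span belonging to one request can be stitched together. The sketch below encodes that context in the W3C Trace Context `traceparent` header format (`version-traceid-parentid-flags`) and handles only version `00`; the `SpanContext` class and helper names are hypothetical. In practice a tracing library such as OpenTelemetry does this propagation for you.

```python
import re
import secrets
from dataclasses import dataclass

# version 00, 32-hex trace-id, 16-hex parent span id, 2-hex trace flags
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


@dataclass(frozen=True)
class SpanContext:
    trace_id: str  # shared by every span in one request's journey
    span_id: str   # unique to one unit of work (one service call, one query)
    sampled: bool = True

    def to_traceparent(self):
        flags = "01" if self.sampled else "00"
        return f"00-{self.trace_id}-{self.span_id}-{flags}"


def new_root():
    """Start a new trace when no valid context arrives with the request."""
    return SpanContext(trace_id=secrets.token_hex(16), span_id=secrets.token_hex(8))


def parse_traceparent(header):
    """Return the caller's span context, or None if the header is missing or invalid."""
    match = TRACEPARENT_RE.match(header.strip())
    if not match:
        return None
    trace_id, parent_id, flags = match.groups()
    if trace_id == "0" * 32 or parent_id == "0" * 16:  # all-zero ids are invalid
        return None
    return SpanContext(trace_id, parent_id, sampled=bool(int(flags, 16) & 0x01))


def child_of(parent):
    """New span in the same trace; its id becomes the parent id for downstream calls."""
    return SpanContext(parent.trace_id, secrets.token_hex(8), parent.sampled)
```

A service would call `parse_traceparent` on the incoming header, do its own work under `child_of(...)`, and forward `child.to_traceparent()` on every outgoing call, so that all spans from the mobile app down to the database share one trace id.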

The high volume of logs, output by the many different parts of a distributed application, can be a gold mine of debugging data. It is essential that these logs be centralized and searchable; with hundreds of ephemeral containers or lambda functions, it's no longer an option to SSH into a server and tail log files.

Unfortunately, plaintext logs usually fall short of expectations. Format inconsistencies from different languages or logging frameworks make them almost impossible to index properly, and there is only so much that regular expressions can do with terabytes of logs.

By structuring logs in JSON, for example, it is possible to upgrade them to be indexable, context-rich events emitted from any part of the application, making them the most powerful debugging tool in your observability toolbox.
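A minimal way to get JSON logs from Python's standard `logging` module is a custom `Formatter` that writes each record as a single JSON object. The field names, the `fields` key used to pass structured context through `extra`, and the `get_logger` helper are assumptions for illustration; dedicated structured-logging libraries offer richer versions of the same idea.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        event = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured context passed as logger.info(..., extra={"fields": {...}}).
        event.update(getattr(record, "fields", {}))
        return json.dumps(event)


def get_logger(name):
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid stacking duplicate handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
    return logger
```

For example, `get_logger("checkout").info("payment failed", extra={"fields": {"customer_id": "c-42"}})` emits one line whose `customer_id` can be indexed and searched on its own, with no regular expressions involved.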

High-cardinality dimensions are the best context

The context that events carry with them is the result of all the different fields included in the event payload. Some of these fields will be standard, such as "timestamp" or "service_name." The true power comes from fields that carry high-cardinality dimensions (i.e., a very large number of possible values), which can isolate the fringe situations involved in investigating unknown unknowns.

Here are some examples of high-cardinality fields and their possible uses in debugging:

Commit hash, build number: Identify a change in behavior between different commits/builds.

CustomerID: Can this issue be isolated to a specific customer?

UserID: … or to a specific person?

IP address: … or to a specific network?
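High-cardinality fields pay off when you can slice failures by them. As a sketch, assuming events are dictionaries parsed from JSON logs, the hypothetical `dominant_value` helper below answers "Can this issue be isolated to a specific customer?" by checking whether one value of a field accounts for most of the matching events. The 50% share threshold is arbitrary.

```python
from collections import Counter


def dominant_value(events, field, predicate=lambda event: True, min_share=0.5):
    """Return (value, share) if one value of `field` dominates matching events, else None."""
    matching = [event for event in events if predicate(event)]
    counts = Counter(event[field] for event in matching if field in event)
    if not counts:
        return None
    value, count = counts.most_common(1)[0]
    share = count / len(matching)
    return (value, share) if share >= min_share else None
```

Running it over failed payments with `field="customer_id"`, then again with `"user_id"`, `"ip_address"`, or `"commit_hash"`, walks through the questions in the list above one dimension at a time.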

In most cases, choosing which contextual fields to add to events depends on the event itself and the service emitting it. A "payment_failed" event will be more insightful with a "payment_provider" field, but that field won't make sense for a "login_successful" event.
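Context can be layered: a few standard fields on every event, plus fields that only make sense for a particular event. In this sketch the `checkout` service name, the `GIT_COMMIT` environment variable, and the `build_event`/`emit_event` helpers are all hypothetical.

```python
import json
import os
import time

# Standard context attached to every event from this (hypothetical) service.
STANDARD_FIELDS = {
    "service_name": "checkout",
    "commit_hash": os.environ.get("GIT_COMMIT", "unknown"),
}


def build_event(name, **fields):
    """Combine standard fields with event-specific, often high-cardinality, fields."""
    event = {"event": name, "timestamp": time.time(), **STANDARD_FIELDS}
    event.update(fields)
    return event


def emit_event(name, **fields):
    # One JSON object per line, ready to ship to a central log store.
    print(json.dumps(build_event(name, **fields)))
```

Here `emit_event("payment_failed", customer_id="c-42", payment_provider="provider-a")` carries the provider, while `emit_event("login_successful", user_id="u-7", ip_address="203.0.113.7")` carries the fields relevant to a login instead.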

Don’t spend all your time testing; use some for instrumenting

The applications your organization builds today are inevitably complex to some degree. Some of this complexity may come from the intricacies of your business domain, the size of the application, or your technology choices.

Building observable applications lets you embrace this complexity and accept that some behaviors will emerge only in production, even with the most mature testing strategy.

Of course, this doesn't mean you shouldn't test; it's just that you shouldn't stop there. Keep some of your time for instrumenting your application with metrics, good logs, and structured, context-rich events. These will be your lifelines when the unpredictable happens.
