How far away are we from machines making safer software, faster? We might be closer than you think.

Other than ensuring that your people are happy and engaged, digital innovation is the best source of competitiveness and value creation for almost every type of business. As a result, three things are increasingly common among corporate software engineering teams and the 20 million software developers who work for them:

They seek faster innovation.

They seek improved security.

They utilize a massive volume of open-source libraries.

The universal desire for faster innovation demands efficient reuse of code, which in turn has led to a growing dependence on open-source and third-party software libraries. These artifacts serve as reusable building blocks, which are fed into public repositories where they are freely borrowed by millions of developers in the pursuit of faster innovation.

This is the definition of the modern technology supply chain—and more specifically, a software supply chain.

Organizations that invest in securing the best parts, from the fewest and best suppliers, and keeping those components updated, are widening the gap against their competitors. The best-performing organizations are applying automation to help them manage their open-source component choices and updates.

As these practices evolve, machines will become better at guiding developers to the best-quality and most secure component versions. And in the not-too-distant future, machines may be compiling the best components into application code based on functional requirements defined upfront.

Here's why automation should be a key strategy for helping you select open-source components, and other lessons from my team's research.

Beware of the proxies

The 2019 State of DevOps Report found that elite organizations are deploying 200 times more frequently than their peers, and their change failure rates are seven times lower. They're also much faster in mean time to recover from failure than other organizations.

But these kinds of metrics are really focused on how you're doing internally as a development team and don't take into account many external factors.

This reminds me of what Jeff Bezos said in his 2017 letter to shareholders: "Beware of the proxies." You can get so focused on a process, and doing that process well, that it becomes the thing that you're trying to achieve.

You might be trying to achieve faster deployments, faster mean times to recovery, or more secure code releases. They can represent your proxies for success, while not necessarily contributing to the outcome your business is attempting to achieve.

Who's faster?

Consider adversaries who attack your code. If you can release new security updates in your codebase within two weeks, but your adversaries can find and exploit the new vulnerabilities in two days, your organization's data is at risk.

In this situation, it does not matter as much that you've already reduced your time to implement security updates fivefold if your adversaries are still faster.

Consider this real-world scenario. On Wednesday, April 29, 2020, the creators and maintainers of SaltStack, an open-source application, announced that the app had a critical vulnerability. On the very same day, they released the safe version of the application. If you had automatic updates turned on from SaltStack, you got the newer version. If you didn't, then you needed to get the newer version, update your infrastructure, and do so before the adversaries found it.

One of the researchers at F-Secure said that "the vulnerability was so critical, this was a patch-by-Friday-or-be-breached-by-Monday kind of situation."

And that's exactly what happened. By Saturday morning, May 2, some 18 people on GitHub reported that breaches were actively happening. They had lost control of their servers. SaltStack had been taken over, rogue code was executing on their systems, and their firewalls were being disabled. Throughout May, 27 breaches were recorded.
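The race described above can be put into numbers. The following is a minimal sketch, not a real monitoring tool: the two dates come from the SaltStack account, while the function names and the two example teams are hypothetical and exist only for illustration.

```python
from datetime import date, timedelta

# Dates taken from the SaltStack account above.
DISCLOSED = date(2020, 4, 29)       # vulnerability announced, fix released
FIRST_BREACHES = date(2020, 5, 2)   # breaches reported on GitHub


def attacker_head_start(disclosed: date, first_breaches: date) -> int:
    """Days between public disclosure and the first reported breaches."""
    return (first_breaches - disclosed).days


def patched_in_time(patched_on: date, first_breaches: date) -> bool:
    """True if the fix was deployed before the first reported breaches."""
    return patched_on < first_breaches


# Hypothetical teams: one with automatic updates, one on a two-week cycle.
auto_update_team = DISCLOSED
two_week_team = DISCLOSED + timedelta(days=14)

print(attacker_head_start(DISCLOSED, FIRST_BREACHES))      # 3
print(patched_in_time(auto_update_team, FIRST_BREACHES))   # True
print(patched_in_time(two_week_team, FIRST_BREACHES))      # False
```

A two-week patch cycle may look good as an internal metric, but against a three-day window it still loses the race.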

Accelerate development

But not all of the news is bad: We know that developers are getting faster, too, because they're not writing all of their code themselves.

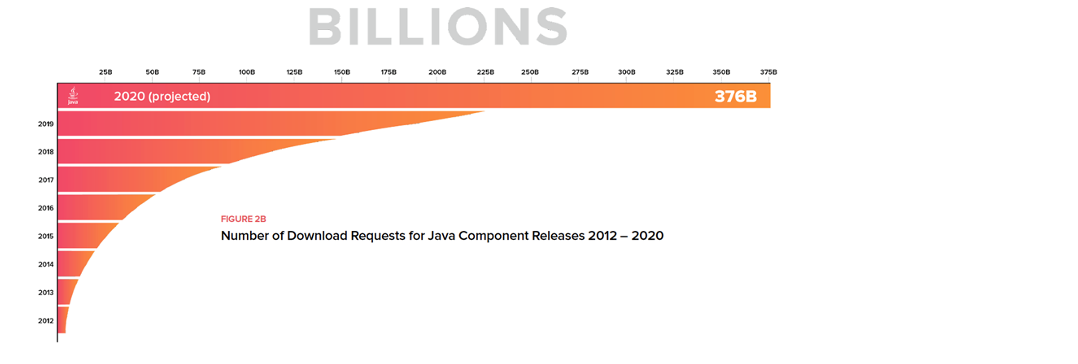

Figure 1: Number of download requests for Java component releases, 2012 to 2020, from the Central Repository. Source: 2020 State of the Software Supply Chain Report

We're assembling more and more code from open-source components and packages. As one example, it's amazing to look at download volumes for the npm package manager. There were 95 billion npm package downloads in July 2020. If you annualize that download volume, we would see over 1.1 trillion npm package downloads this year.

In Java, similar things are happening. In 2019, Maven Central had 226 billion download requests. In 2020, download request volumes are expected to hit 376 billion.
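The arithmetic behind these figures is simple enough to check. This short sketch uses only the numbers quoted above; the variable names are my own, and the annualized figure assumes every month matches July's volume.

```python
# Figures quoted in the article.
npm_downloads_july_2020 = 95_000_000_000
maven_requests_2019 = 226_000_000_000
maven_requests_2020_expected = 376_000_000_000

# Annualize by assuming every month matches July's volume.
npm_annualized = npm_downloads_july_2020 * 12

# Expected year-over-year growth for Maven Central.
maven_growth = (
    maven_requests_2020_expected - maven_requests_2019
) / maven_requests_2019

print(f"{npm_annualized / 1e12:.2f} trillion npm downloads per year")  # 1.14
print(f"{maven_growth:.0%} expected growth in Maven Central requests")  # 66%
```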

How do these monstrous numbers translate to your own developers and applications? After analyzing 1,500 unique applications, we can see that 90% of their code footprint is built from open-source software components.

Build the best code

As I started thinking about all of the above, I wanted to understand not just how these parts are being used, but where they are coming from and who the open-source software suppliers are. So, in a two-year-long collaboration, Gene Kim, Stephen Magill, and I examined software release patterns and cybersecurity hygiene practices across 30,000 commercial development teams and open-source projects.

We set out to understand what attributes we could use to identify the best open-source project performance and practices. If development teams were going to assemble applications from these building blocks, we wanted to understand who the best suppliers were.

We wanted to know who released most often, who were the most popular suppliers, who prioritized features over security or security over features, who enlisted automated build tools, which projects were consistently well staffed, and more. All of these variables played a role in identifying suppliers with the best track records, because they would be the ones to help developers build the best applications.

Additionally, the more you could teach machines to identify the attributes of the best open-source software suppliers for developers, the faster development could become.

Lessons from our open-source projects

The top-performing projects released 1.5 times more frequently than the rest of the teams we studied, were 2.5 times more popular by download count, had 1.4 times larger development teams, and managed 2.9 times fewer dependencies.

We also saw a strong correlation between open-source projects that updated dependencies more frequently and their ability to maintain more secure code. High-performing projects demonstrated a median time to update (MTTU) their dependencies that was 530 times faster than other projects. By moving to the latest dependencies, they remediated, deliberately or as a side effect, known vulnerabilities discovered in older dependencies.

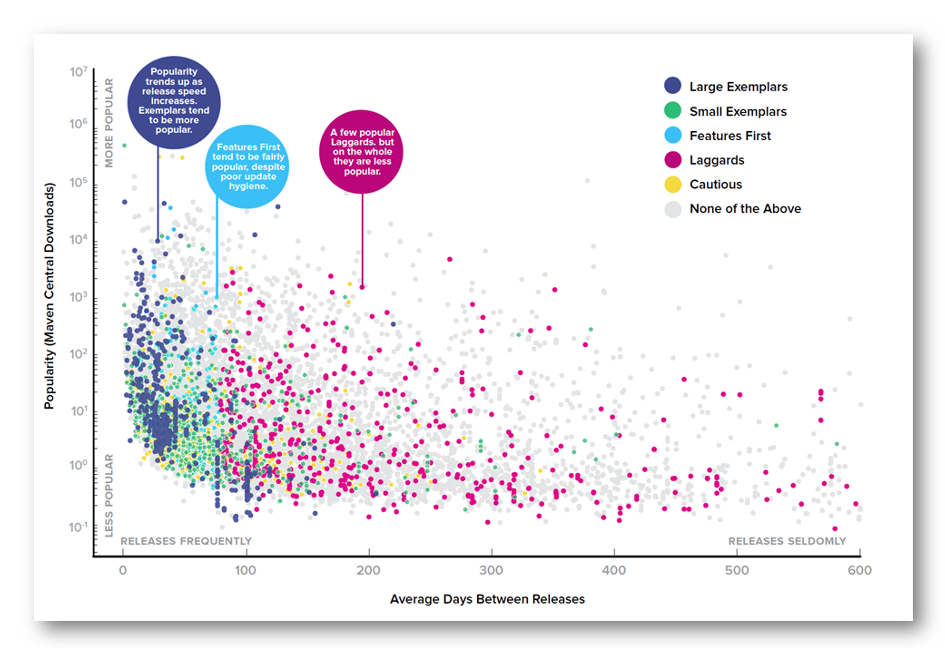

Figure 2: Open-source project cluster analysis of popularity and release speed. Source: 2019 State of the Software Supply Chain Report

To better understand all this, we performed a cluster analysis of these different open-source projects based on several attributes. We were able to see what development teams should focus on when choosing components.

Choosing open-source projects should be considered an important strategic decision for enterprise software development organizations. Different components demonstrate healthy or poor performance that affects the overall quality of their releases.

Therefore, MTTU should be an important metric when deciding which components to use within your software supply chains. Rapid MTTU is associated with lower security risk, and it's accessible from public sources.
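To make the metric concrete, here is a minimal sketch of how an MTTU figure could be computed. It assumes one plausible reading of the metric: for each dependency update, measure the days between the new version's release and the project's adoption of it, then take the median. The record type, function names, and sample data are all hypothetical and are not taken from the report.

```python
from datetime import date, timedelta
from statistics import median
from typing import Iterable, NamedTuple


class DependencyUpdate(NamedTuple):
    dependency: str
    released: date   # when the new dependency version was published
    adopted: date    # when the project switched to that version


def mttu_days(updates: Iterable[DependencyUpdate]) -> float:
    """Median number of days between a release and its adoption."""
    return median((u.adopted - u.released).days for u in updates)


def update(name: str, released: date, lag_days: int) -> DependencyUpdate:
    """Build a hypothetical sample record with a known adoption lag."""
    return DependencyUpdate(name, released, released + timedelta(days=lag_days))


# Hypothetical projects: one that updates quickly, one that lags.
fast_project = [
    update("lib-a", date(2020, 1, 1), 3),
    update("lib-b", date(2020, 2, 1), 10),
    update("lib-c", date(2020, 3, 1), 5),
]
slow_project = [
    update("lib-a", date(2019, 1, 1), 200),
    update("lib-b", date(2019, 2, 1), 400),
    update("lib-c", date(2019, 3, 1), 90),
]

print(mttu_days(fast_project))  # 5
print(mttu_days(slow_project))  # 200
```

A median is less sensitive than a mean to one dependency that sat untouched for years, which is one reason it suits this kind of comparison.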

Update your open-source components regularly

Just as traditional manufacturing supply chains intentionally select parts from approved suppliers and rely upon formalized procurement practices, enterprise development teams should adopt similar criteria for their selection of open-source software components.

This practice helps ensure that the highest-quality parts are selected from the best and fewest suppliers. Implementing selection criteria and update practices will not only improve code quality, but can also shorten mean time to repair when suppliers discover new defects or vulnerabilities.

Ideally, dependencies should be updated simply, safely, and painlessly as part of the routine development process. But reality shows that this ideal is rarely met.

An astonishing story of how far an organization can stray from ideal update practices comes from Eileen M. Uchitelle, staff engineer at GitHub, who said it took seven years to successfully migrate GitHub from a forked version of Rails 2 to Rails 5.2.

Even with new tools available to developers that automatically create pull requests with updated dependencies, changes in APIs and potential breakage can still hold back many developers from updating. We suspect this change-induced breakage is a primary driver of poor updating practices.

A tale of three open-source component releases

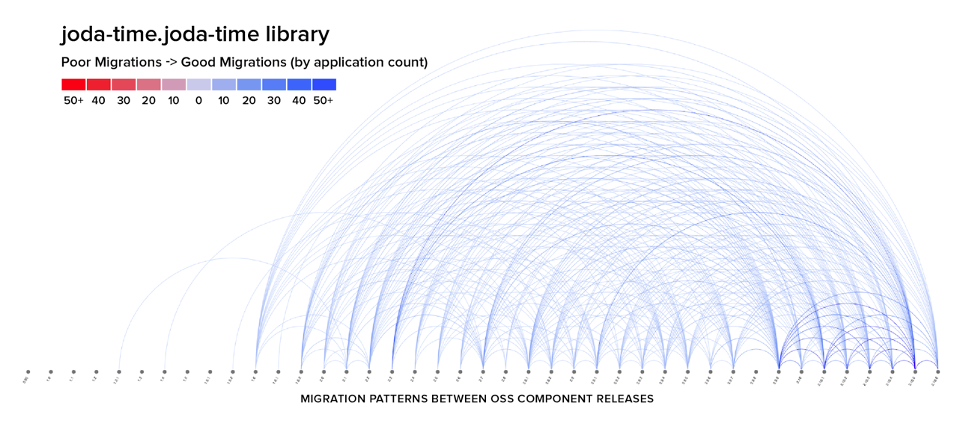

A deeper dive into the vast data available to us from the Central Repository, the world's largest collection of open-source components, makes it possible to visualize open-source project releases and how enterprise application development teams migrate from one version to a newer one. We believe this data shows how open-source component selection can play a major role in allowing for easier and more frequent updates.

Figure 3: Migration patterns between component releases for the joda-time library. Source: 2020 State of the Software Supply Chain Report

Consider the widely used joda-time library, which shows that developers using this open-source component update fairly uniformly between all pairs of versions. This suggests that updates are easy, presenting a seemingly homogeneous set of versions to migrate to and from.

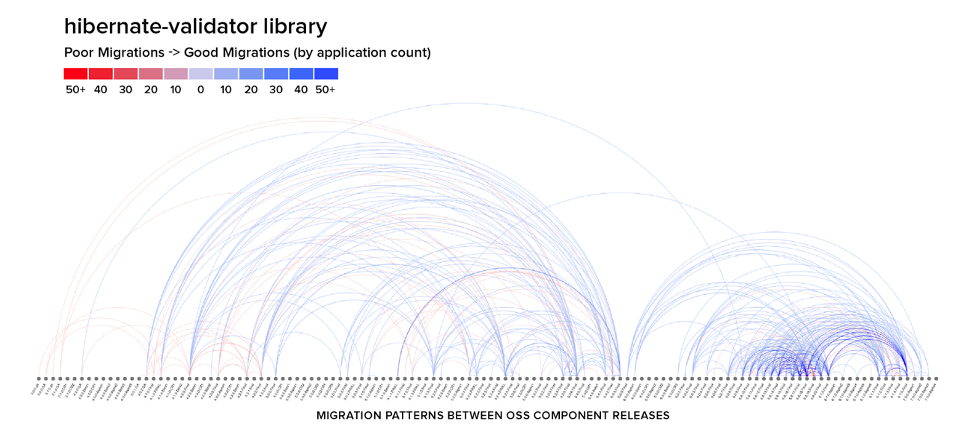

Figure 4: Migration patterns between component releases for the hibernate-validator library. Source: 2020 State of the Software Supply Chain Report

On the opposite extreme, consider the graph for the hibernate-validator library, where there are two sets of communities using it—one favoring version 5 and another preferring version 6. The two communities very rarely intersect. This suggests either that updating to version 6 from version 5 is too difficult or that the value is not worth the effort.

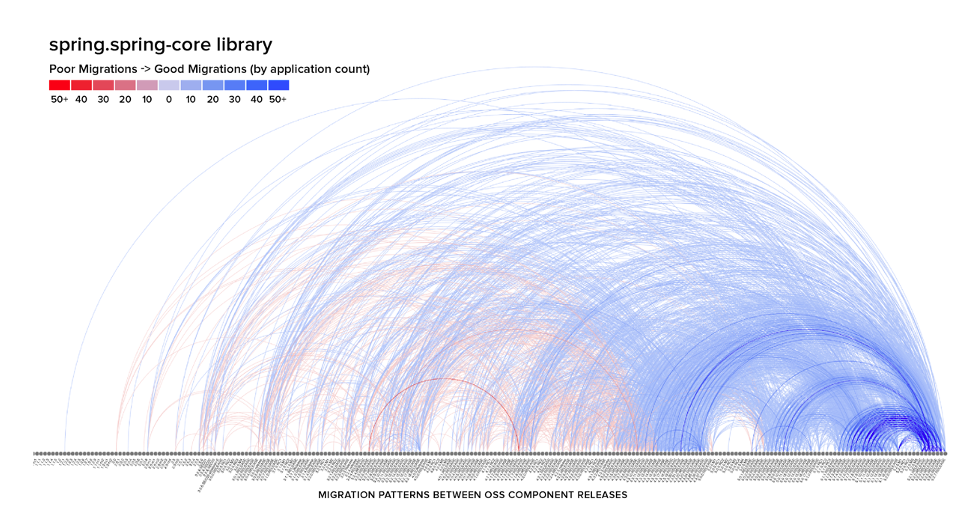

Figure 5: Migration patterns between component releases for the spring-core library. Source: 2020 State of the Software Supply Chain Report

Finally, we take a look at the pattern for spring-core, which suggests that updating is sufficiently difficult that the effort must be planned and some version ranges end up being avoided.

Will machines make software?

If you are a developer, don't worry; your job is secure. No machine out there will take your place. Having said that, an increased reliance on automation to help you select better, higher-quality, and more secure components can serve you and your teams well today.

You can use automation, through advanced software composition analysis and open-source governance tools, to point you to suppliers with a better track record: those that release often, remediate vulnerabilities quickly, are well staffed, and are popular.

Using these tools to set policies around components can help you determine when to upgrade your dependencies, and they can quickly inform you of newly discovered vulnerabilities in need of remediation. Additionally, these tools can lead developers to the best versions of components by indicating which newer versions will introduce the fewest breaking changes and which would bring in troublesome dependencies.
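As an illustration of what a component policy might look like, here is a minimal sketch. It does not reflect the API of any real software composition analysis product; the class names, fields, and thresholds are hypothetical and chosen only to mirror the supplier attributes discussed above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ComponentStats:
    name: str
    releases_per_year: float
    mttu_days: float             # median time to update its dependencies
    known_vulnerabilities: int


@dataclass(frozen=True)
class Policy:
    # Hypothetical thresholds; a real team would tune these.
    min_releases_per_year: float = 4
    max_mttu_days: float = 30
    max_known_vulnerabilities: int = 0


def policy_violations(c: ComponentStats, p: Policy = Policy()) -> List[str]:
    """Return a human-readable list of the policy rules a component breaks."""
    problems = []
    if c.releases_per_year < p.min_releases_per_year:
        problems.append(f"{c.name}: releases too rarely")
    if c.mttu_days > p.max_mttu_days:
        problems.append(f"{c.name}: slow to update its dependencies")
    if c.known_vulnerabilities > p.max_known_vulnerabilities:
        problems.append(f"{c.name}: has known vulnerabilities")
    return problems


healthy = ComponentStats("lib-healthy", 12, 7, 0)
stale = ComponentStats("lib-stale", 1, 365, 2)

print(policy_violations(healthy))  # []
for problem in policy_violations(stale):
    print(problem)
```

A check like this could run in a build pipeline, so that a developer hears about a poor component choice when adding it rather than after a breach.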

To learn more about our research into high-performance, open-source, component-based development, read the 2020 State of the Software Supply Chain Report or attend my upcoming session on this topic at the DevOps World virtual conference, which runs from September 22-24, 2020.
