We are firmly in the age of machine learning. If you're not including these techniques in your coding practices, it's time to catch up.

Cars are on the cusp of driving themselves, not because software engineers taught them how to drive, but because they taught them how to learn. Your credit card company is adept at catching fraudulent charges because it has trained algorithms to recognize what such charges look like. Your bank can automatically process a picture of a handwritten check because of character recognition models inspired by the structure of the brain.

All around us, machines are learning, aided by software engineers who have graduated from controlling them to teaching them how to control themselves. The benefits of machine learning have been felt in nearly every industry, and in some industries, it is now hard to imagine how we ever did without it. Yet, software engineers have been generally slow to apply the benefits of machine learning to their own work. As the saying goes, the cobbler's children have no shoes.

If you haven't yet worked with machine learning, or you just want to expand your understanding of how you can apply machine learning to software development, then read on. This article is the first in a series that will show how you, a software engineer, can apply data science and machine learning techniques to the practice of your craft.

I start here with a practical example: How to use the classic market basket analysis technique to make your code check-in process more robust.

The problem: Missed changes in source code

Most applications have source code files that are somehow coupled together, such that a change in one should be accompanied by a change in the other. For example, a change in the signature of a class method defined in one source code file will likely be accompanied by changes in other files where that method is called.

Similarly, a change in the definition of an interface or abstract class will probably require a change in its concrete implementations. The coupling between some of these files will be fairly obvious, to the point where disconnects can be caught by the compiler.

However, other changes may be harder to catch, especially if your application has a lot of technical debt. The compiler alone won't flag duplicate code that needs to be kept in sync across multiple different places.

It would be desirable if your source code management system had some hook that fired upon check-in, and looked for potentially missing files—files not present in the check-in and which are usually changed at the same time as other files in the check-in. If any such files were found, you could receive an email or instant message about the potential oversight.

The solution: Market basket analysis

The problem of discovering connections between files in your source code is similar to the one addressed by market basket analysis (also called affinity analysis), a well-established technique that retailers use to understand which items are frequently purchased together. For example, if you were analyzing supermarket data, you might discover that a certain type of snack is frequently purchased with a certain type of soda, and so you might try to place these close together on the shelf in hopes of increasing sales of both.

Although such techniques are frequently applied in a retail setting, the core idea of discovering connections between elements in a set of transactions can be applied to many domains. Essentially, such analysis aims to identify a set of association rules between items that allow us to say how much more likely it is that item A will appear if item B is already present.

If you think of your source code check-in as a sales transaction, and the individual files as products, you can easily apply market basket analysis methods to software changes.

Fundamentals of association rules

Association rules form the basis of market basket analysis. But to fully understand those rules, you need to define a few fundamental concepts.

Support

Support is the proportion of transactions in which an item, a set of items, or an association rule appears. Support is defined as:

Support(X) = Count(X) / N

where X is the rule or set of items, and N is the total number of transactions. For example, a file that appeared five times in 100 check-ins would have a support of 0.05.

Confidence

Any association rules for your files data will look like this:

File123 -> File456

That reads as "if File123 appears, File456 will also appear." Our confidence in a rule tells us how often the items on the right-hand side will appear, given that the items on the left-hand side have appeared. Confidence is defined as:

Confidence(X->Y) = Support(X,Y) / Support(X)

More concretely, imagine that you have the following item sets from a hardware store cash register, in which each item set represents the contents of a single receipt.

- {hammer, nails}

- {hammer, nails, rope}

- {nails, ladder}

- {ladder, rope}

- {screwdriver, hammer}

What is our confidence that a receipt containing a hammer will also contain nails?

Confidence(hammer->nails) = Support(hammer, nails) / Support(hammer)

Hammer appears in 3 out of 5 receipts (60 percent), while the combination of hammer and nails appears in 2 out of 5 receipts (40 percent), so:

Confidence(hammer->nails) = .40/.60 = 0.667

That is, if hammer appears on the receipt, nails will also appear 2/3 (67%) of the time.
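The arithmetic above can be reproduced in a few lines of base R. This is only a minimal sketch: the names receipts, support(), and confidence() are illustrative helpers written for this example, not functions from any package.

```r
# The five receipts from the hardware store example
receipts <- list(
  c("hammer", "nails"),
  c("hammer", "nails", "rope"),
  c("nails", "ladder"),
  c("ladder", "rope"),
  c("screwdriver", "hammer")
)

# Support: share of transactions that contain every item in `items`
support <- function(items, transactions) {
  hits <- vapply(transactions, function(tx) all(items %in% tx), logical(1))
  sum(hits) / length(transactions)
}

# Confidence(X -> Y) = Support(X, Y) / Support(X)
confidence <- function(lhs, rhs, transactions) {
  support(c(lhs, rhs), transactions) / support(lhs, transactions)
}

support("hammer", receipts)              # 0.6
support(c("hammer", "nails"), receipts)  # 0.4
confidence("hammer", "nails", receipts)  # 2/3, about 0.667
```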

Lift

Confidence tells us something about the strength of a rule, but not everything we need to know. If nails appeared in 95% of transactions, then even a perfect confidence score on the hammer->nails rule would not be very informative.

Lift tells us how much more likely an item is to be present if another item is present. We compare the confidence of the association between the left-hand side and the right-hand side to the rate at which the right-hand side occurs, irrespective of any other items (its support).

Lift(X->Y) = Confidence(X->Y) / Support(Y)

The larger the lift of an association rule, the stronger the association. Returning to our hammer and nails example:

Lift(hammer->nails) = Confidence(hammer->nails) / Support(nails)

Lift(hammer->nails) = .667 / .60 = 1.11

This means that nails are 1.11 times as likely to be present when a hammer is present as they are in general: a small, but positive lift. Lifts of greater than one suggest that the incidence of the right-hand side is increased by the left-hand side, while lifts of less than one suggest that the incidence of the right-hand side is suppressed by the left-hand side.
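To check the lift figure by hand, here is a small base R sketch using the same five receipts. The helper names support() and lift() are made up for this illustration; they are not part of a package.

```r
# The five receipts from the hardware store example
receipts <- list(
  c("hammer", "nails"),
  c("hammer", "nails", "rope"),
  c("nails", "ladder"),
  c("ladder", "rope"),
  c("screwdriver", "hammer")
)

# Share of transactions that contain every item in `items`
support <- function(items, transactions) {
  hits <- vapply(transactions, function(tx) all(items %in% tx), logical(1))
  sum(hits) / length(transactions)
}

# Lift(X -> Y) = Confidence(X -> Y) / Support(Y)
lift <- function(lhs, rhs, transactions) {
  conf <- support(c(lhs, rhs), transactions) / support(lhs, transactions)
  conf / support(rhs, transactions)
}

lift("hammer", "nails", receipts)   # about 1.11: a mild positive association
lift("ladder", "hammer", receipts)  # 0: these two never share a receipt
```

Note that lift is symmetric: lift("nails", "hammer", receipts) gives the same value as lift("hammer", "nails", receipts), whereas confidence generally differs by direction.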

I'll now move to an extended example of acquiring, cleaning, and analyzing realistic check-in data. At the end of this example, I'll return to the concept of lift to draw some conclusions.

Methods and tools

Below is an example of discovering the association rules in a set of source code changes, based on real, publicly available data from the Apache Tomcat application server project. That data set is derived from check-ins for bug fixes, and so is not completely representative of all Tomcat check-ins, but it is easily obtainable and is sufficient to demonstrate the relevant techniques.

The example is written in R, a statistical computing language commonly used for data science. I recommend installing the RStudio integrated development environment (IDE) to make your explorations with R easier.

For the sake of brevity, this tutorial will assume that you have both of these already installed, and that you have explored them enough to be able to start running the code samples. The simplest way to get started is to paste the code into the Source pane (top left of RStudio), and use the accompanying "Run" button to execute it one line at a time.

Because of the variety of source code management systems and continuous integration methods in common use, I won't be showing the complete integration of association rules into a working development pipeline. Instead, I will demonstrate only the steps needed to discover the association rules and to output these to a file.

Incorporating those rules into your specific development process and tool chain will have to be done in context. In general, popular source code management systems, such as Git and SVN, support "hooks" that allow code to run upon check-in. That code examines the content of the check-in and uses the association rules to determine whether or not the responsible developer needs to be alerted to potentially missed changes.
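As a rough idea of what such a check might look like, here is a minimal base R sketch. Everything in it is hypothetical: the rules table, the file names, the missing_companions() helper, and the 0.5 threshold are assumptions for illustration, and it handles only rules with a single file on the left-hand side. A real hook would load the rules from a file and obtain the list of changed files from the source control system.

```r
# Hypothetical rules table: one row per rule "lhs -> rhs"
rules <- data.frame(
  lhs = c("src/Parser.java", "src/Parser.java", "src/Lexer.java"),
  rhs = c("src/ParserTest.java", "docs/grammar.md", "src/Parser.java"),
  confidence = c(0.85, 0.40, 0.70),
  stringsAsFactors = FALSE
)

# Return files that usually accompany the changed files
# but are absent from the check-in
missing_companions <- function(changed, rules, min_confidence = 0.5) {
  triggered <- rules[rules$lhs %in% changed &
                       rules$confidence >= min_confidence, ]
  setdiff(triggered$rhs, changed)
}

# A check-in that touches only the parser
missing_companions("src/Parser.java", rules)
# "src/ParserTest.java"
```

If the result is non-empty, the hook could send the developer the email or instant message described earlier.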

Below I will walk through the code one piece at a time. Finally, I will provide all of the code at once for ease of copying. You can run all of the code as a single R script.

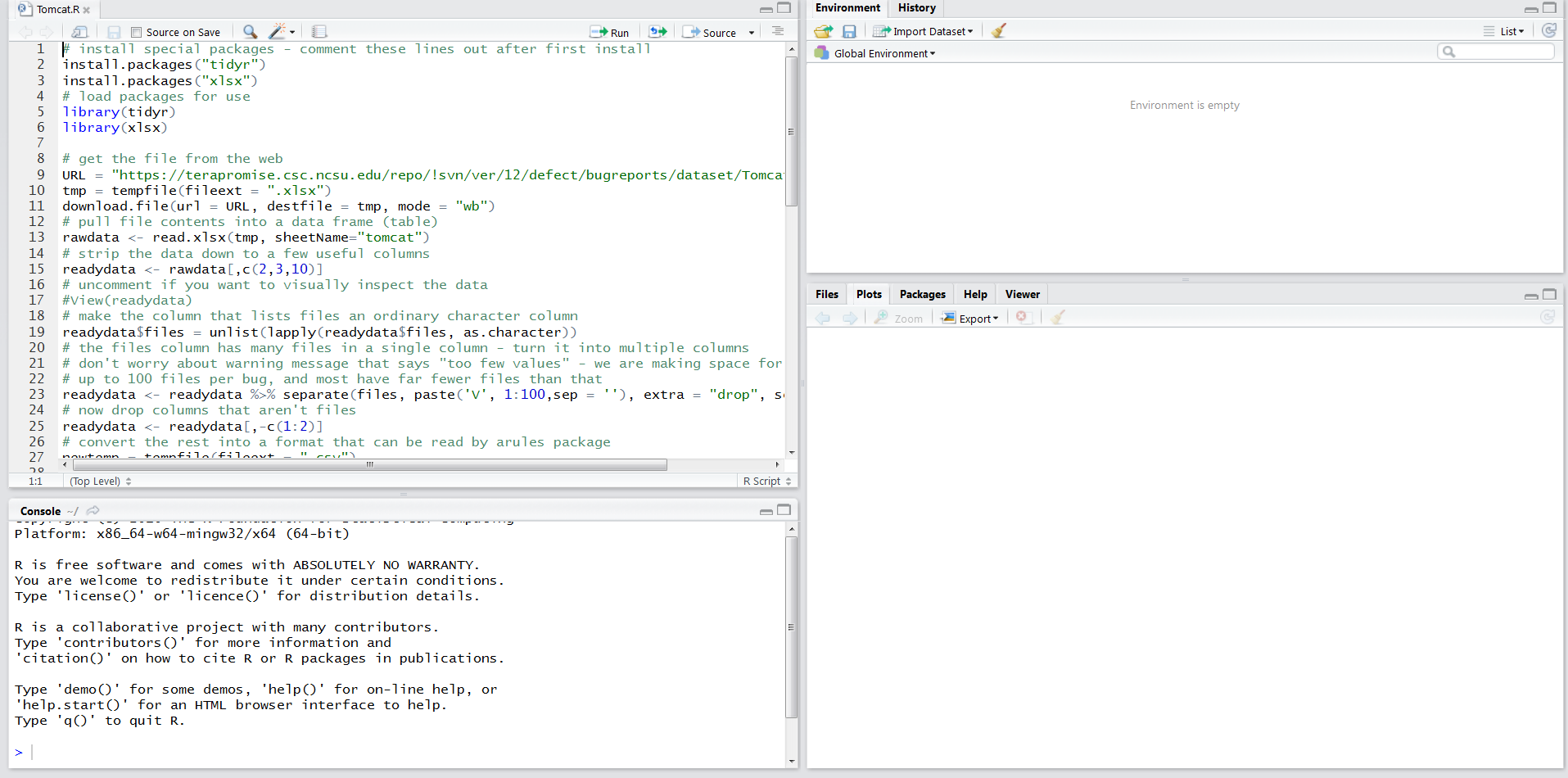

The initial R set up

Before you can start the meat of your work, you need to acquire a couple of R packages that are not available by default. You can install these from the Comprehensive R Archive Network (CRAN).

Use the code below to download and install these packages. After you have run it once, you may want to comment it out, as it need not run again. Lines preceded by a hash (#) are comments in R.

# install special packages - comment these lines out after first install

install.packages("tidyr")

install.packages("xlsx")

The tidyr package makes it easy to format data in specific ways, and the xlsx package will help you read data from Excel files. (The Apache bug fix data you need is provided in Excel files.)

Once you have installed tidyr and xlsx, make these available to the rest of your code using the library() function, which is similar to using or import statements in other languages.

# load packages for use

library(tidyr)

library(xlsx)

Getting the data

You are now ready to download the Apache bug fix data from Terapromise, which is a great source for freely available data sets about software engineering.

# get the file from the web

URL <- "https://terapromise.csc.ncsu.edu/repo/!svn/ver/12/defect/bugreports/dataset/Tomcat.xlsx"

tmp <- tempfile(fileext = ".xlsx")

download.file(url = URL, destfile = tmp, mode = "wb")

The code above sets up two variables: the URL at which the data is located, and a temporary file with a ".xlsx" extension. It then downloads the data to that temporary file using the "wb" (write binary) mode. Notice that the assignment operator for variables is an arrow (<-). You can also use an equals sign for variable assignment in R, but use of the arrow operator is more idiomatic, at least in some circles.

Transforming the data

You have your data in an Excel spreadsheet, but that is not the format expected by the package you will use to build the association rules, so you'll need to transform it. To do this, first pull the data into a data frame, which is essentially an in-memory table. When you are working in R, you will often want to pull your data into a frame for ease of analysis and manipulation. Notice that the Xlsx library you are using requires that you specify the name of the specific sheet within the Excel file that you want to read.

# pull file contents into a data frame (table)

rawdata <- read.xlsx(tmp, sheetName="tomcat", stringsAsFactors=FALSE)

A factor in R is basically a category variable—a value that we expect to be repeated across multiple rows, much as the values "Private" and "Corporal" would repeatedly show up in a "Rank" column for a table of US Army soldiers. The read.xlsx() function treats all strings as factors by default, and so you use the stringsAsFactors argument to tell R that the values in files and other columns are not repeating categories.
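To make the distinction concrete, here is a tiny base R illustration using made-up rank values. It shows how a factor stores a fixed set of levels plus integer codes, while a plain character vector stores the strings themselves.

```r
# Made-up values for a "Rank" column
ranks <- c("Private", "Corporal", "Private", "Private")

# As a factor: a set of levels plus an integer code per row
rank_factor <- factor(ranks)
levels(rank_factor)      # "Corporal" "Private"
as.integer(rank_factor)  # 2 1 2 2

# As plain strings: what stringsAsFactors = FALSE preserves
is.character(ranks)      # TRUE
is.factor(rank_factor)   # TRUE
```

File paths are nearly unique per row and need to be split as text later, so keeping them as plain strings is the more convenient choice here.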

To see the contents of the rawdata frame displayed nicely in RStudio, use the View() command.

# visually inspect the raw data

View(rawdata)

Notice that there are several columns here, and you won't be needing all of them. You're mainly interested in the files column, which contains data that looks like this:

java/org/apache/jasper/compiler/PageInfo.java java/org/apache/jasper/compiler/Validator.java

The association rules package will expect each individual file (each item in a transaction) to be in a separate column, and so we'll need to modify the data to make that happen. First, however, let's get rid of the data we don't need, leaving only the files column and a couple of other columns for context.

# strip the data down to a few useful columns

readydata <- rawdata[,c(2,3,10)]

# visually inspect the changed data

View(readydata)

An R data frame can be indexed positionally using a [rows,columns] convention. If I wanted to see the value for the third column of the fourth row, I could write rawdata[4,3] to indicate just that single cell. I can also give ranges or lists to both the rows and columns arguments. If I don't supply a specification for either the rows argument or the column argument, it will default to returning all available. So, when I ask R for rawdata[,c(2,3,10)], it means that I want all rows (since I left that first argument blank) and a specific set of columns.

The c() function stands for combine, and allows me to combine 2, 3, and 10 into a list of columns that I would like returned. In a nutshell, I am asking R to take the second, third, and tenth columns of rawdata, and drop these into a new frame called readydata. When you view this new frame, you will see the files column, along with a couple of other columns that describe the check-in.
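If the indexing rules feel abstract, the following sketch tries them on a small, invented data frame. The bugs frame and its column names are hypothetical and only loosely resemble the Tomcat data.

```r
# A small, invented data frame for practicing [rows, columns] indexing
bugs <- data.frame(
  id = c(101, 102, 103, 104),
  summary = c("NPE on start", "Leak in pool", "Bad header", "Slow parse"),
  priority = c("P2", "P1", "P3", "P2"),
  files = c("a/Foo.java", "a/Foo.java b/Bar.java", "c/Baz.java", "b/Bar.java"),
  stringsAsFactors = FALSE
)

bugs[4, 3]        # a single cell: fourth row, third column ("P2")
bugs[1:2, ]       # rows 1 and 2, all columns
bugs[, c(2, 4)]   # all rows, columns 2 and 4 (summary and files)
bugs[, -c(1:2)]   # all rows, every column except the first two
```

The last line uses negative indices, which remove the listed columns rather than select them.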

Now you need to rearrange the contents of the files column so that each file name is in a separate column, because that is the way your soon-to-be used arules (association rules) package expects the data to be organized. You'll use a function from the tidyr package called separate() to break this column into multiple columns.

The files column currently has a space in between each file, so use the space as your separator (sep). The paste() command will help you create 100 separate columns, "V 1" through "V 100," to hold the newly separated file names. For some check-ins, you'll only have one or two files, but others will have over 90 files, and so you need to make enough space for all cases.

The scary looking %>% is a pipe operator that essentially means "pass the value of the expression on the left forward into the expression on the right." After all this processing is done, you overwrite the old version of readydata with the new version.

# the files column has many files in a single column

# turn it into multiple columns

# don't worry about warning message that says "too few values"

# we are making space for up to 100 files per bug

# most have far fewer files than that

readydata <- readydata %>% separate(files, paste('V', 1:100), sep = " ")
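To see what this splitting step produces, here is a base R sketch of the same idea on two invented rows. It is not how separate() is implemented; it only mimics the result, splitting on spaces and padding short rows with NA. The width of 3 stands in for the 100 columns used above and is assumed to be at least as large as the longest row.

```r
# Two invented values from a "files" column
files <- c("a/Foo.java a/Bar.java", "b/Baz.java")

# Number of output columns (assumed >= the most files in any row)
width <- 3

# Split each value on spaces
parts <- strsplit(files, " ", fixed = TRUE)

# Pad every row with NA so that all rows have `width` entries
padded <- t(vapply(
  parts,
  function(p) c(p, rep(NA_character_, width - length(p))),
  character(width)
))
colnames(padded) <- paste("V", 1:width)

padded
# Row 1 holds two file names and one NA; row 2 holds one file name and two NAs
```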

Finally, drop all columns except those that list files. You do this by making a collection of column numbers that represents the first and second columns, and then subtracting these columns from the readydata frame. Overwrite the old version of readydata with this newly slimmed down version, and then write it out to a CSV (comma-separated values) file, so that the arules package can read it.

# now drop columns that aren't files

readydata <- readydata[,-c(1:2)]

# convert the rest into a format that can be read by arules package

newtemp <- tempfile(fileext = ".csv")

write.csv(readydata,file = newtemp)

Building association rules

Now that all our preparation is done, it's time to start discovering association rules with the arules package. First, install the package and make it available for use with the library() function.

# install association rules package

# comment below line out after first install

install.packages("arules")

library(arules)

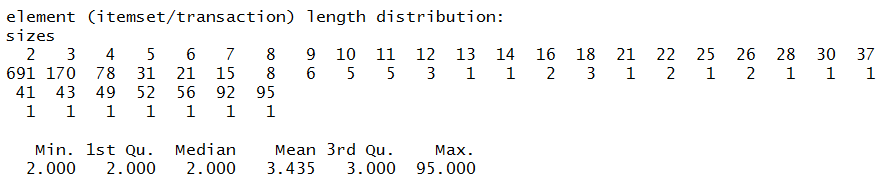

Next, read in the transactions created earlier. Skip the first line, which contains only column headers, and specify a comma as the separator since you're reading a CSV file. Then, ask for a summary of the transactions you read.

# read transactions - skip the first line of header info

checkins <- read.transactions(newtemp, sep = ",", skip = 1)

summary(checkins)

Notice that summary() produces several interesting statistics. For example, most of the transactions list only two files, but one transaction has 95.

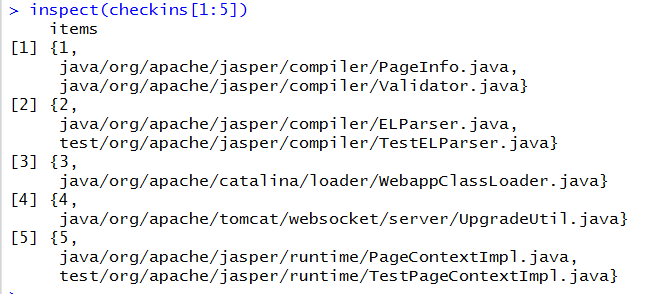

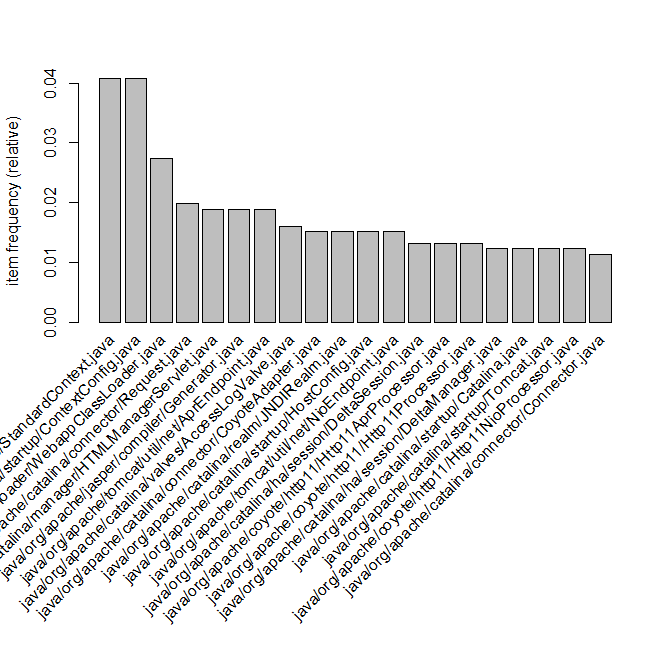

Look at a few sample transactions, such as the first five, to make sure that the data came over correctly. You can also run an item frequency plot to see the top 20 most commonly occurring files.

# look at the first five check-ins

inspect(checkins[1:5])

# plot the frequency of items

dev.new()

itemFrequencyPlot(checkins, topN = 20)

The java/org/apache/catalina/core/StandardContext.java file is most common, appearing in roughly 4% of transactions (a support of 0.04).

Now, you start to learn association rules from the data using a function called apriori(). It's so named because it uses logical rules (rules that are known a priori, or before experience) to cut down on the amount of processing it has to do. For example, a file that doesn't appear frequently in the transaction set can be ignored because an infrequent item can't be the basis for a frequently occurring rule or combination of rules. So, if a file has low support (it appears infrequently), apriori() won't bother to try to look for rules relevant to that file.
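The pruning idea can be sketched in base R on a handful of invented check-ins. This is a simplified illustration of the principle only, not the arules implementation: it counts single files first, discards the infrequent ones, and then forms candidate pairs only from the files that remain. The file names and the 0.4 threshold are made up.

```r
# Five invented check-ins
checkins <- list(
  c("A.java", "B.java"),
  c("A.java", "B.java", "C.java"),
  c("A.java", "B.java"),
  c("D.java"),
  c("A.java", "E.java")
)
min_support <- 0.4

# Share of check-ins that contain every file in `items`
support <- function(items) {
  mean(vapply(checkins, function(tx) all(items %in% tx), logical(1)))
}

# Pass 1: support of each individual file
all_files <- sort(unique(unlist(checkins)))
file_support <- vapply(all_files, support, numeric(1))

# Prune: only frequent files can be part of a frequent pair
frequent <- names(file_support)[file_support >= min_support]

# Pass 2: build candidate pairs from the frequent files only
candidates <- combn(frequent, 2, simplify = FALSE)
pair_support <- vapply(candidates, support, numeric(1))

frequent                     # "A.java" "B.java"
length(candidates)           # 1 candidate pair instead of choose(5, 2) = 10
pair_support                 # 0.6
```

With thousands of distinct files, skipping the pairs that involve rarely changed files is what keeps the search manageable.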

# training a model on the data

# default settings result in zero rules learned

apriori(checkins)

The first time you run apriori() with its default settings, it won't generate any rules because none of the rules in your transaction set will meet its default criteria. For example, apriori() defaults to reporting rules that have a support of 0.1 or higher (i.e., are relevant to 10% or more of the transactions), and due to the number of separate files in the check-in data set, you have no rules that are relevant to a tenth of the check-ins.

As you saw above, the most commonly appearing file, java/org/apache/catalina/core/StandardContext.java, appears in less than 5% of check-ins. So, you'll need to tune the parameters of apriori() a bit. Start by finding the rules that appear in at least five of the 1000+ check-ins, and that have at least a 50% confidence.

# set support and confidence levels

filerules <- apriori(checkins, parameter = list(

support = 0.005, confidence = 0.50))

Now you can inspect these rules, find the ones with the most lift, and search for rules related to a specific file.

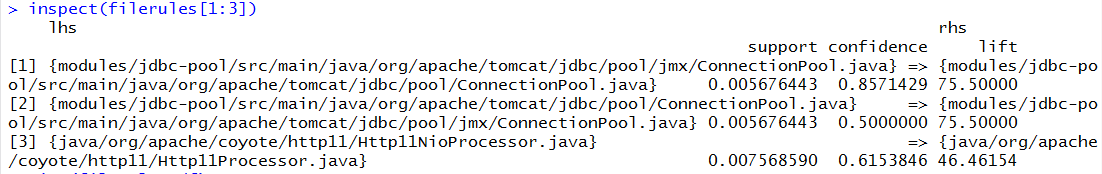

# look at the first three rules

inspect(filerules[1:3])

The formatting of the output in RStudio is a bit ugly, but you'll see that the files ending in /pool/ConnectionPool.java and /pool/jmx/ConnectionPool.java are closely coupled: the presence of one in a check-in makes it 75 times more likely than in the average check-in that you will see the other.

This is a strong lift. At the same time, you see that these two files are not always paired together. If a check-in contains /pool/jmx/ConnectionPool.java, you will also see /pool/ConnectionPool.java 85% of the time (a high confidence). However, if a check-in contains /pool/ConnectionPool.java, you will see /pool/jmx/ConnectionPool.java only 50% of the time, a meaningful but lesser confidence.

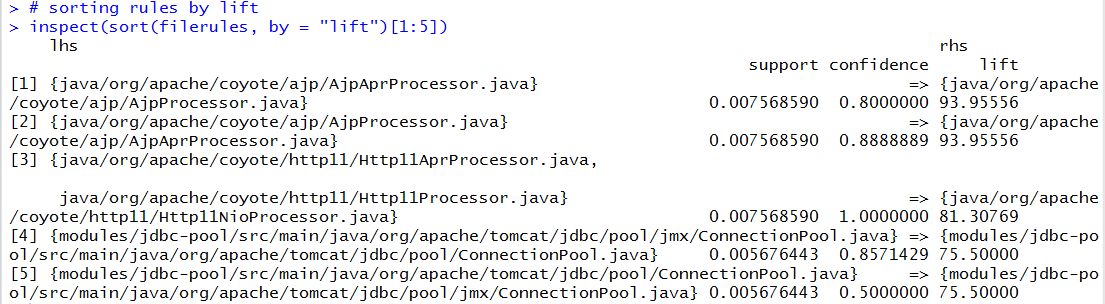

Which rules give us the most lift?

# sorting rules by lift

inspect(sort(filerules, by = "lift")[1:5])

It seems as though the AjpProcessor.java file and the AjpAprProcessor.java file are closely linked with both high lifts (the presence of one makes the presence of the other 93 times more likely than average) and high confidences (if one shows up, the other will show up 80% or more of the time). If you check in one of these files without the other, you will want to double-check you haven't missed something.

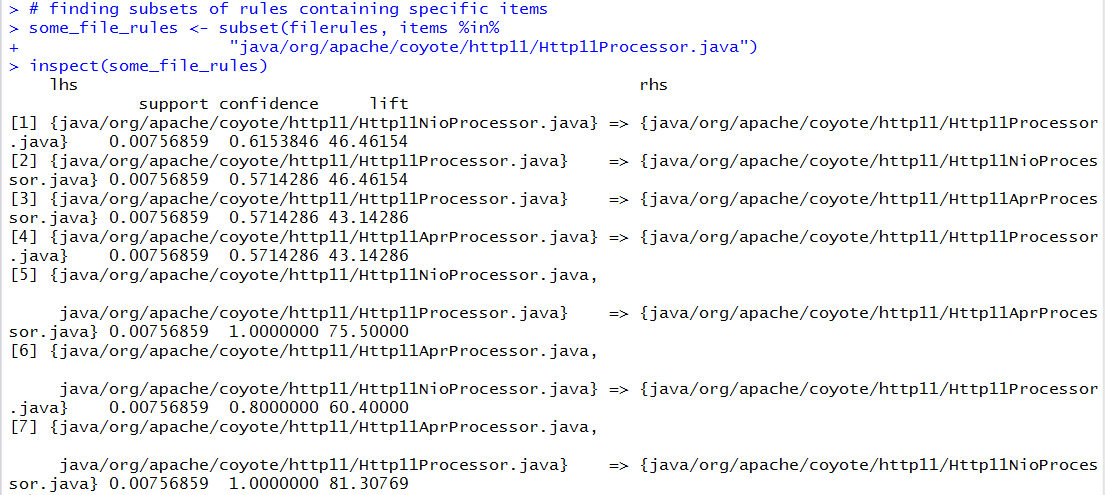

Can you find important rules specific to a particular item? (This is the sort of question you should ask upon check-in with respect to the specific files in that check-in.)

# finding subsets of rules containing specific items

some_file_rules <- subset(filerules, items %in%

"java/org/apache/coyote/http11/Http11Processor.java")

inspect(some_file_rules)

If I check in the Http11Processor.java file, I want to be mindful of whether I should also change the Http11AprProcessor.java and Http11NioProcessor.java files.

Finally, if I am trying to integrate the use of these rules into my source control system so that they can be consulted automatically upon check-in, I may want to implement those hooks in another language. So, I'll dump my rules out to a commonly readable format that can be used in any language, such as a CSV file.

# writing the rules to a CSV file

write(filerules, file = "filerules.csv",

sep = ",", quote = TRUE, row.names = FALSE)
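Whatever language consumes the exported file will need to split each rule into its two sides. The sketch below does this in base R for two invented rule strings. It assumes each rule is stored as text of the form {lhs} => {rhs}, with multiple files separated by commas; inspect your own filerules.csv to confirm the layout before relying on this.

```r
# Two invented rule strings in the assumed "{lhs} => {rhs}" form
rule_text <- c(
  "{src/A.java} => {src/B.java}",
  "{src/A.java,src/C.java} => {src/D.java}"
)

# Remove the leading "{" and trailing "}" from one side of a rule
strip_braces <- function(s) gsub("^\\{|\\}$", "", s)

# Split each rule into its left-hand and right-hand side
sides <- strsplit(rule_text, " => ", fixed = TRUE)

# Left-hand side: possibly several files per rule
lhs <- lapply(sides, function(s) {
  strsplit(strip_braces(s[1]), ",", fixed = TRUE)[[1]]
})

# Right-hand side: a single file per rule in this example
rhs <- vapply(sides, function(s) strip_braces(s[2]), character(1))

lhs[[2]]  # "src/A.java" "src/C.java"
rhs       # "src/B.java" "src/D.java"
```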

Recap: Here's the full code

Here's the full, combined code from all of the steps above, all in one place:

# install special packages - comment these lines out after first install

install.packages("tidyr")

install.packages("xlsx")

# load packages for use

library(tidyr)

library(xlsx)

# get the file from the web

URL <- "https://terapromise.csc.ncsu.edu/repo/!svn/ver/12/defect/bugreports/dataset/Tomcat.xlsx"

tmp <- tempfile(fileext = ".xlsx")

download.file(url = URL, destfile = tmp, mode = "wb")

# pull file contents into a data frame (table)

rawdata <- read.xlsx(tmp, sheetName="tomcat", stringsAsFactors=FALSE)

# visually inspect the raw data

View(rawdata)

# strip the data down to a few useful columns

readydata <- rawdata[,c(2,3,10)]

# visually inspect the changed data

View(readydata)

# the files column has many files in a single column

# turn it into multiple columns

# don't worry about warning message that says "too few values"

# we are making space for up to 100 files per bug

# most have far fewer files than that

readydata <- readydata %>% separate(files, paste('V', 1:100), sep = " ")

# now drop columns that aren't files

readydata <- readydata[,-c(1:2)]

# visually inspect the changed data

View(readydata)

# convert the rest into a format that can be read by arules package

newtemp <- tempfile(fileext = ".csv")

write.csv(readydata,file = newtemp)

# install association rules package

# comment below line out after first install

install.packages("arules")

library(arules)

# read transactions - skip the first line of header info

checkins <- read.transactions(newtemp, sep = ",", skip = 1)

summary(checkins)

# look at the first five check-ins

inspect(checkins[1:5])

# plot the frequency of items

dev.new()

itemFrequencyPlot(checkins, topN = 20)

# training a model on the data

# default settings result in zero rules learned

apriori(checkins)

# set support and confidence levels

filerules <- apriori(checkins, parameter = list(

support = 0.005, confidence = 0.50))

# look at the first three rules

inspect(filerules[1:3])

# sorting rules by lift

inspect(sort(filerules, by = "lift")[1:5])

# finding subsets of rules containing specific items

some_file_rules <- subset(filerules, items %in%

"java/org/apache/coyote/http11/Http11Processor.java")

inspect(some_file_rules)

# writing the rules to a CSV file

write(filerules, file = "filerules.csv",

sep = ",", quote = TRUE, row.names = FALSE)

Next step: Apply what you learned to your own check-in process

Now you understand association rule learning, and how you can apply market basket analysis techniques to source code control in order to identify potentially missed changes.

You used the arules association rule package to learn about interesting connections between files in your code base, and saw how you can use that information to catch cases in which the developer forgot to change or check in a file.

Could you have done this analysis without using R, or any special statistical computing environment? Certainly. You could have pulled your check-in data into a database and used SQL or another language to implement assessments of support, confidence, and lift. But that would have meant creating more code and expending more effort, at least on the analysis side.

In my next installment, I'll demonstrate how you can apply other machine learning techniques to the creation of software. In the meantime, if there's something you didn't understand, or if you have a comment, share your thoughts below.

Image credit: Flickr
