Enterprises are increasingly running applications in cloud-native environments using containers, along with orchestration tools such as Kubernetes, to facilitate scalability and resilience. If your organization falls into this category (and the chances are good that it does today, or plans to soon), you must make securing the deployment a top priority.

If you're uncertain about your current security posture, begin by answering these five key questions that address security requirements across the entire container lifecycle, from the point where you're building container images to where they are running live.

This is far from an exhaustive list, but if you can answer yes to each of these questions, you are off to a good start.

1. Are you running your builds separately from your production cluster?

You don't want to run builds in your production cluster, because if your build processes are on the same machine as your application workloads, they're sharing a kernel. If hackers were to compromise the build process, they could potentially get to the host, gaining access to those running applications.

Bear in mind that the RUN instructions in a Dockerfile can execute arbitrary code, so if hackers can modify the Dockerfile, they can make the build do virtually anything they want. The risk is even greater if your build process uses the Docker socket, because access to that socket is essentially root access to the host.
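One rough way to spot workloads that reach the Docker socket is to look for hostPath volumes that mount it. This is a minimal sketch, assuming the pod spec has already been parsed into a Python dictionary (for example, from YAML). The function name and the set of socket paths are illustrative assumptions, and the check won't catch a mount of a parent directory such as /var/run.

```python
from typing import Any, Dict

# Assumed socket locations; Docker installations commonly use one of these.
DOCKER_SOCKET_PATHS = {"/var/run/docker.sock", "/run/docker.sock"}


def mounts_docker_socket(pod_spec: Dict[str, Any]) -> bool:
    """Return True if a hostPath volume in the pod spec points at the Docker socket."""
    for volume in pod_spec.get("volumes", []):
        host_path = (volume.get("hostPath") or {}).get("path", "")
        if host_path.rstrip("/") in DOCKER_SOCKET_PATHS:
            return True
    return False


build_pod = {
    "containers": [{"name": "build", "image": "docker:latest"}],
    "volumes": [{"name": "docker-sock", "hostPath": {"path": "/var/run/docker.sock"}}],
}
print(mounts_docker_socket(build_pod))  # True
```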

Of course, there is an exception to every rule, so perhaps you have reasons that justify running builds in your production environment. If you know what you're doing, it can be done relatively safely.

For example, you could schedule your build jobs to run on specific nodes in the cluster where application workloads are not executed. Or you could use rootless build processes to limit exposure. However, unless you have a strong reason to do this, the simplest way to keep builds from compromising your production cluster is to keep them entirely separate.
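If you do run builds in the cluster, the node-isolation approach could look something like the following pod spec, generated here as a Python dictionary. The `dedicated=build` label and taint are hypothetical names. The build nodes would need to be labeled and tainted to match (for example, with `kubectl label nodes <node-name> dedicated=build` and `kubectl taint nodes <node-name> dedicated=build:NoSchedule`). The `nodeSelector` keeps the build on those nodes, and the taint keeps application pods, which lack the toleration, off them.

```python
import json
from typing import Any, Dict, List

# Hypothetical node label and taint reserved for build nodes; adjust to your cluster.
BUILD_NODE_LABEL = {"dedicated": "build"}
BUILD_TOLERATION = {"key": "dedicated", "operator": "Equal", "value": "build", "effect": "NoSchedule"}


def build_pod_spec(image: str, args: List[str]) -> Dict[str, Any]:
    """Return a pod spec that schedules a build only onto the reserved build nodes."""
    return {
        "nodeSelector": dict(BUILD_NODE_LABEL),  # only nodes carrying the build label
        "tolerations": [dict(BUILD_TOLERATION)],  # tolerate the taint that repels other pods
        "restartPolicy": "Never",
        "containers": [
            {
                "name": "build",
                "image": image,
                "args": args,
                # Run the build as a non-root user; needs a rootless-capable builder image.
                "securityContext": {"runAsNonRoot": True},
            }
        ],
    }


print(json.dumps(build_pod_spec("registry.example.com/rootless-builder:1.0", ["--context", "."]), indent=2))
```

The result corresponds to the `spec` section of a Pod (or a Job's pod template). `runAsNonRoot` only works if the builder image itself runs as a non-root user, and a given rootless builder may need further settings.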

2. Is executable code added to a container image only at build time?

Once you build your application container image, it's a good idea to scan it for known vulnerabilities in the packages or language dependencies inside that container image. There are several free and open-source tools, as well as commercial options, available for vulnerability scanning.

These tools tell you whether your container images contain known vulnerabilities that attackers could exploit. You should put checks in place to avoid deploying images with serious vulnerabilities.
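A deployment gate of this kind can be as simple as comparing each finding's severity against a threshold. This is a minimal sketch, assuming the scanner's report has already been reduced to a list of dictionaries with a `severity` field. The field names, severity labels, and the `HIGH` default threshold are assumptions to adapt to your scanner and policy.

```python
from typing import Dict, List

# Severity labels used by many scanners; check what your scanner actually emits.
SEVERITY_RANK = {"UNKNOWN": 0, "LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_findings(findings: List[Dict[str, str]], threshold: str = "HIGH") -> List[Dict[str, str]]:
    """Return the findings whose severity is at or above the threshold."""
    limit = SEVERITY_RANK[threshold]
    return [
        f for f in findings
        if SEVERITY_RANK.get(f.get("severity", "UNKNOWN").upper(), 0) >= limit
    ]


def may_deploy(findings: List[Dict[str, str]], threshold: str = "HIGH") -> bool:
    """Allow deployment only if no finding reaches the threshold."""
    return not blocking_findings(findings, threshold)


# Hypothetical, simplified scan results; real scanner output is richer.
findings = [
    {"id": "EXAMPLE-0001", "package": "libfoo", "severity": "LOW"},
    {"id": "EXAMPLE-0002", "package": "libbar", "severity": "CRITICAL"},
]
print(may_deploy(findings))  # False
print([f["id"] for f in blocking_findings(findings)])  # ['EXAMPLE-0002']
```

In a CI pipeline, you would typically fail the job, for example with a non-zero exit code, when `may_deploy` returns False.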

Your containers are instantiated from those container images. Once a container is running, it's possible to change the contents, for example by using package managers to add or update packages, or by transferring code with tools such as wget, curl, or FTP. But if you modify the container to include code that is not part of the build, that code is not scanned for vulnerabilities, so you are at risk.

The best practice is to treat containers as immutable: run only the code that was present when you built the image, and make sure the image you scanned is exactly the image the container is running.
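One concrete way to make sure the image you scanned is the image you run is to reference it by digest rather than by tag. A tag such as `:1.4` can be moved to point at different content, while a `sha256` digest identifies exactly one image. The check below is a simplified sketch that only looks at the shape of the reference string; the regular expression is an assumption and does not validate registry or repository names.

```python
import re

# A digest-pinned reference ends in "@sha256:" followed by 64 lowercase hex characters.
DIGEST_REF = re.compile(r"^[^@\s]+@sha256:[0-9a-f]{64}$")


def is_pinned_by_digest(image_ref: str) -> bool:
    """True if the reference names an exact image digest rather than only a mutable tag."""
    return DIGEST_REF.match(image_ref) is not None


print(is_pinned_by_digest("registry.example.com/app:1.4"))  # False
print(is_pinned_by_digest("registry.example.com/app@sha256:" + "0" * 64))  # True
```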

Some security tools can enforce this through controls known as "drift prevention," but even if you're not using such a tool, you can still educate your team about the risks and require immutable images in your organization.
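Dedicated tools enforce drift prevention at runtime. As a much simpler, after-the-fact illustration of the idea, you could record a hash of every file in a directory at build time and later compare it with the same directory inside a running container. The function names and the stored baseline are assumptions for this sketch. It ignores permissions, symlinks, and special files, and hashing a large filesystem this way would be slow.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def snapshot(root: str) -> Dict[str, str]:
    """Map each regular file under root (by relative path) to its SHA-256 digest."""
    base = Path(root)
    digests = {}
    for path in sorted(base.rglob("*")):
        # Skip directories and symlinks; only hash regular file contents.
        if path.is_file() and not path.is_symlink():
            digests[str(path.relative_to(base))] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def drift(baseline: Dict[str, str], current: Dict[str, str]) -> Dict[str, List[str]]:
    """Report files added, removed, or modified relative to the baseline snapshot."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(k for k in baseline.keys() & current.keys() if baseline[k] != current[k]),
    }
```

For example, `drift(baseline, snapshot("/app"))`, with `/app` standing in for a hypothetical application directory, would list anything that changed since the build. A non-empty `added` or `modified` list suggests the container is no longer running only the code you scanned.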

However frequently you need to change the code in your containers, the best practice is to rebuild your container image and redeploy with the updated code.

3. Are you avoiding --privileged?

When you run your containers, they are defined by the container image and then configured with flags, such as parameters to docker run, or settings in the pod spec YAML in Kubernetes.

At all costs, avoid using the --privileged flag. Some people call this the most dangerous flag in computing. That point may be debatable, but let's examine why --privileged is a top candidate for that title.

Before we examine what's happening when you run a container with --privileged, here's a quick primer on Linux capabilities.

At one time, you were either a root user with all privileges, or you were an unprivileged user with no special permissions. Capabilities were added to the Linux kernel to make these permissions more granular, so that users could be granted or denied specific privileges.

Today, running a container by default provides it with a sensible set of these capabilities. For example, containers are unlikely to have any reason to modify the kernel modules or change the system time, so the default capabilities exclude the permissions to do these and other things that containers almost certainly don't need to do.

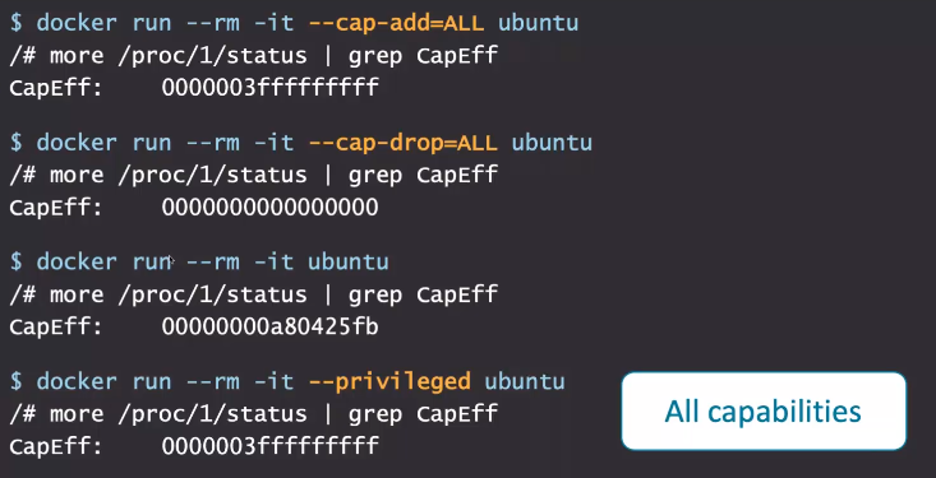

When you run a container, you can specify the set of capabilities that are granted to it. Figure 1 shows examples of running a regular Ubuntu container and checking what capabilities it has.

In the first example, where we give the container "ALL" capabilities, you see a hexadecimal value made up of lots and lots of "f"s, representing a set of bit flags that are all turned on.

Next, we run the same container and drop all the capabilities. Notice how all the flags are turned off, and the values are zero.

And third, if we don't specify any capabilities at all, we get a default set that is a mixture of some flags off, some on.

As you can see in the fourth example, running with --privileged grants all the capabilities, as if we had specified --cap-add=ALL. But that isn't the end of the story.

Figure 1: Checking to see what capabilities a regular Ubuntu container has.
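The capability values discussed above are bitmasks in which each bit corresponds to one Linux capability. The sketch below decodes such a value, for example the `CapEff` line from `/proc/self/status` inside a container, into capability names; the `capsh --decode` command from libcap does the same job. The name table assumes the capability numbering of recent Linux kernels (bits 0 to 40, ending with `CAP_CHECKPOINT_RESTORE`), and any higher bit is reported as unknown. The sample value `00000000a80425fb` is the effective set commonly seen in a container started with Docker's default capabilities.

```python
from typing import List

# Capability names indexed by bit number, following linux/capability.h.
CAP_NAMES = [
    "CAP_CHOWN", "CAP_DAC_OVERRIDE", "CAP_DAC_READ_SEARCH", "CAP_FOWNER",
    "CAP_FSETID", "CAP_KILL", "CAP_SETGID", "CAP_SETUID", "CAP_SETPCAP",
    "CAP_LINUX_IMMUTABLE", "CAP_NET_BIND_SERVICE", "CAP_NET_BROADCAST",
    "CAP_NET_ADMIN", "CAP_NET_RAW", "CAP_IPC_LOCK", "CAP_IPC_OWNER",
    "CAP_SYS_MODULE", "CAP_SYS_RAWIO", "CAP_SYS_CHROOT", "CAP_SYS_PTRACE",
    "CAP_SYS_PACCT", "CAP_SYS_ADMIN", "CAP_SYS_BOOT", "CAP_SYS_NICE",
    "CAP_SYS_RESOURCE", "CAP_SYS_TIME", "CAP_SYS_TTY_CONFIG", "CAP_MKNOD",
    "CAP_LEASE", "CAP_AUDIT_WRITE", "CAP_AUDIT_CONTROL", "CAP_SETFCAP",
    "CAP_MAC_OVERRIDE", "CAP_MAC_ADMIN", "CAP_SYSLOG", "CAP_WAKE_ALARM",
    "CAP_BLOCK_SUSPEND", "CAP_AUDIT_READ", "CAP_PERFMON", "CAP_BPF",
    "CAP_CHECKPOINT_RESTORE",
]


def decode_caps(hex_mask: str) -> List[str]:
    """Turn a hex capability bitmask (e.g. from CapEff) into capability names."""
    mask = int(hex_mask, 16)
    names = []
    bit = 0
    while mask >> bit:  # stop once no higher bits remain set
        if (mask >> bit) & 1:
            names.append(CAP_NAMES[bit] if bit < len(CAP_NAMES) else f"unknown bit {bit}")
        bit += 1
    return names


def effective_caps(status_path: str = "/proc/self/status") -> List[str]:
    """Read and decode the effective capability set of the current process (Linux only)."""
    with open(status_path) as status:
        for line in status:
            if line.startswith("CapEff:"):
                return decode_caps(line.split()[1])
    raise ValueError("no CapEff line found")


default_caps = decode_caps("00000000a80425fb")
print(len(default_caps))  # 14
print("CAP_SYS_ADMIN" in default_caps)  # False
```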

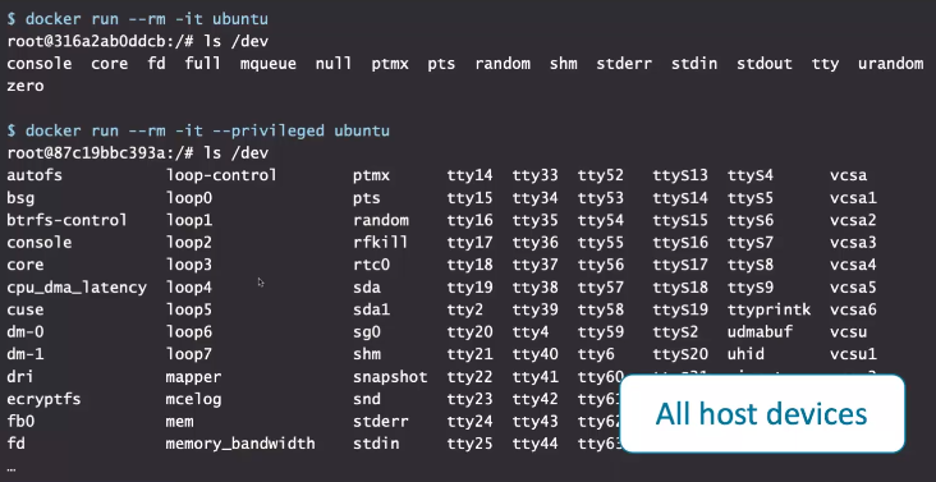

When you run the container with --privileged, in addition to all those capabilities (many of which are quite powerful and likely unnecessary), you can also now access a lot more devices (see Figure 2).

In fact, --privileged grants access to every single device on the host. With the combination of access to all capabilities and all devices, a privileged container could, for example, reformat the drives attached to the host. So, an exploitable vulnerability in a container with --privileged would allow an attacker to do very dangerous things.

Figure 2: The --privileged flag allows access to every device on the host.
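To catch --privileged, or its close cousin of adding ALL capabilities, before it reaches a cluster, you could scan pod specs for it. This is a minimal sketch, assuming the pod spec is a parsed Python dictionary; it checks the `privileged` and `capabilities.add` fields of each container's `securityContext`, and the function name is hypothetical. Admission controllers and policy engines can enforce the same rule inside the cluster itself.

```python
from typing import Any, Dict, List


def risky_containers(pod_spec: Dict[str, Any]) -> List[str]:
    """Name every container that runs privileged or adds all capabilities."""
    flagged = []
    for key in ("initContainers", "containers", "ephemeralContainers"):
        for container in pod_spec.get(key, []):
            sc = container.get("securityContext") or {}
            added = [c.upper() for c in (sc.get("capabilities") or {}).get("add") or []]
            if sc.get("privileged") is True or "ALL" in added:
                flagged.append(container.get("name", "<unnamed>"))
    return flagged


pod_spec = {
    "containers": [
        {"name": "app", "image": "registry.example.com/app:1.4"},
        {"name": "debug", "image": "ubuntu", "securityContext": {"privileged": True}},
    ]
}
print(risky_containers(pod_spec))  # ['debug']
```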

4. Are you keeping your hosts up to date with the latest security releases?

"Stay up to date on all software patches" has long been salient advice for security professionals and IT systems administrators. It's a basic and necessary best practice for thwarting attackers who try to exploit known vulnerabilities. The same applies to the software on the hosts that run Kubernetes or other cloud-native software.

Unfortunately, while it's a well-established security best practice, there’s also a long history of organizations failing to follow it. Learn from the example of the devastating 2017 WannaCry ransomware attack, which affected more than 200,000 systems in about 150 countries and caused an estimated $4 billion in damage. Yet it was preventable: Microsoft had released patches to fix the Windows vulnerability almost two months before the attack.

Alarmingly, I see similar patterns playing out in cloud-native environments. For example, there are enterprise users still running on versions of Kubernetes that are two years old, exposing their organizations to enormous (and unnecessary) risk because they’re using an older version that has not been updated with recent security fixes. Kubernetes releases come out every three or four months, and only the latest three versions are maintained with security updates.
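Following the rule of thumb that only the three most recent Kubernetes minor releases receive security updates, a quick check of whether a cluster is still inside that window could look like this. The sketch assumes you supply the latest released minor version yourself (from the Kubernetes release announcements) and that version strings look like `v1.18.3`. The window size is a parameter because support policies can change.

```python
import re


def minor_version(version: str) -> int:
    """Extract the minor number from a version string such as 'v1.18.3' or '1.18'."""
    match = re.match(r"^v?1\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"unrecognized Kubernetes version: {version!r}")
    return int(match.group(1))


def within_support_window(cluster: str, latest: str, supported_minors: int = 3) -> bool:
    """True if the cluster runs one of the `supported_minors` newest minor releases."""
    age = minor_version(latest) - minor_version(cluster)
    return 0 <= age < supported_minors


print(within_support_window("v1.17.4", "v1.18"))  # True
print(within_support_window("v1.15.12", "v1.18"))  # False
```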

I recently provided an overview of my container security checklist for the KubeSec Enterprise Online webinar series. Because it was a live and interactive session, I was able to ask attendees questions, including whether they're keeping hosts up to date with security releases. Here's the breakdown of their responses:

- Yes: 72%

- No: 18%

- Don’t know: 10%

While it was reassuring to see that nearly three quarters of attendees are up to date, it needs to be 100%.

If your organization is among the 28% that answered no or don't know, take the steps necessary to be able to answer yes immediately. If you are using a managed Kubernetes environment, make sure that your vendor is collecting all the security upgrades you need to address vulnerabilities and is promptly providing you with those patches.

5. Are your secrets encrypted both at rest and in transit?

Some of your application containers may need secret information, such as passwords or tokens, to do their jobs. For example, your application might need a database password. If you’re on Kubernetes and using native Kubernetes secrets, be aware that, by default, Kubernetes stores those secrets unencrypted.

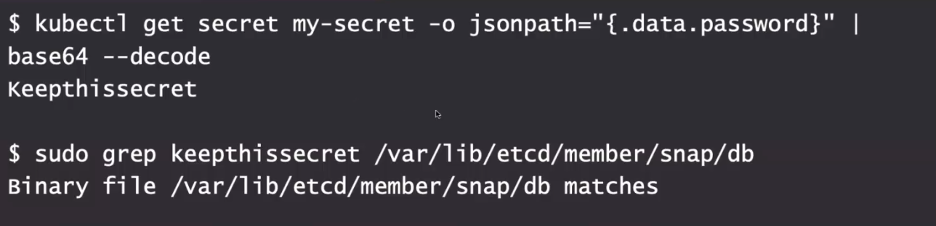

When you retrieve a secret using kubectl, its values are only base64-encoded, so you can get the plaintext version with simple base64 decoding, as shown in Figure 3.

Figure 3: Decoding a Kubernetes secret via simple base64 decoding, and finding it in the etcd database.
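Base64 is an encoding, not encryption, which is why the decoding in Figure 3 is trivial. This sketch decodes the `data` field of a Secret that has been fetched as JSON (for example, with `kubectl get secret <name> -o json`). The secret name and value are made up for illustration, and the function assumes the values are UTF-8 text rather than binary data.

```python
import base64
from typing import Dict


def decode_secret_data(data: Dict[str, str]) -> Dict[str, str]:
    """Decode every base64-encoded value in a Secret's data field (assumes UTF-8 text)."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


# Hypothetical Secret object, as kubectl would return it in JSON form.
secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials"},
    "type": "Opaque",
    "data": {"password": "cGFzc3dvcmQxMjM="},
}
print(decode_secret_data(secret["data"]))  # {'password': 'password123'}
```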

This secret resource could be protected by Kubernetes RBAC, so that not everyone (or every pod) can access the secret over the API or with kubectl. But there is another way to get to this secret—looking directly into the etcd database.
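As an illustration of that RBAC protection, a Role can grant read access to specific named Secrets only. This sketch builds such a Role as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts. The role name, namespace, and secret name are hypothetical, and you would still need a RoleBinding to attach the Role to a user or service account. The rule grants only `get`, because `list` or `watch` on Secrets would return their contents too.

```python
import json
from typing import Any, Dict, List


def secret_reader_role(namespace: str, secret_names: List[str]) -> Dict[str, Any]:
    """Build a Role that can read only the named Secrets in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "read-db-secret", "namespace": namespace},  # hypothetical name
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group, where Secrets live
                "resources": ["secrets"],
                "resourceNames": secret_names,  # restrict access to these Secrets only
                "verbs": ["get"],  # no "list" or "watch", which also return Secret contents
            }
        ],
    }


print(json.dumps(secret_reader_role("payments", ["db-credentials"]), indent=2))
```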

If you're storing secrets natively in Kubernetes, without any additional plugins, they're going to be stored along with all your other state information in the etcd database by default. If you search for your secret value inside the database (also shown in Figure 3), you can see that the database file contains a match for the secret value.

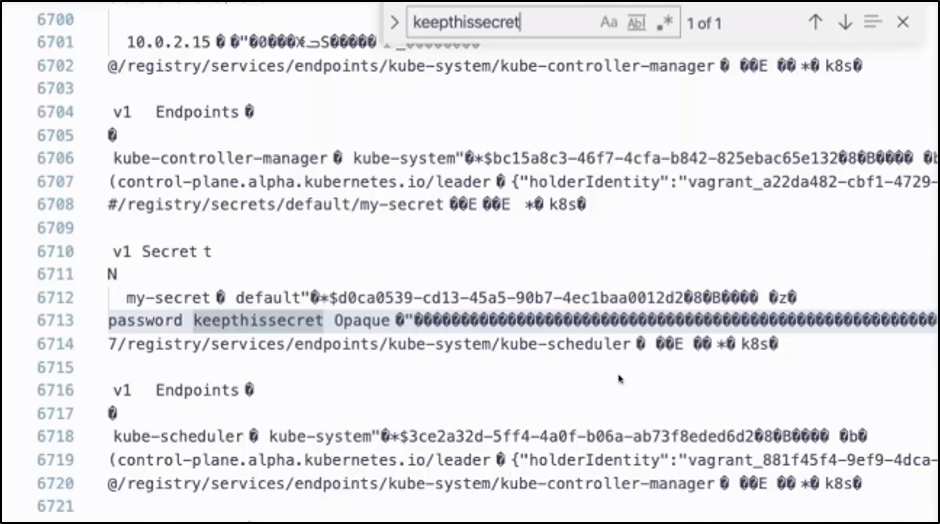

The etcd database file is a binary file, so it’s not very pretty in my editor (Figure 4), but you can see that the secret is just sitting there in plaintext. Anyone who has access to the database file on the host machine can read any of the secrets that are stored in there, because by default they are not encrypted.

Figure 4: Kubernetes secrets stored in the etcd database file are not encrypted.
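Once you have enabled encryption at rest, one way to sanity-check it, similar to the search shown in Figure 3, is to look for a known test value in a copy of the etcd database file. The sketch scans a binary file in chunks so it doesn't have to load the whole file into memory. The function name is hypothetical, the database file's location varies by installation, and you should use a throwaway test secret rather than a real one. Keep in mind that Secrets written before encryption was enabled stay in plaintext until they are rewritten.

```python
def file_contains(path: str, needle: bytes, chunk_size: int = 1 << 20) -> bool:
    """Return True if `needle` occurs anywhere in the file, reading it in chunks."""
    if not needle:
        raise ValueError("needle must not be empty")
    overlap = len(needle) - 1  # bytes carried over so matches spanning chunks are found
    tail = b""
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                return False
            window = tail + chunk
            if needle in window:
                return True
            tail = window[-overlap:] if overlap else b""


# Hypothetical usage against a copy of the etcd data file:
# file_contains("/tmp/etcd-copy.db", b"throwaway-test-value")
```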

You do have options, such as enabling encryption at rest for secrets in etcd, or using a secrets manager to hold the secret values separately outside of etcd. You might want to take advantage of your cloud provider's key management system to securely store your secrets. Fortunately, there are a number of Kubernetes integrations for secret storage.
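For the etcd encryption option, Kubernetes reads an `EncryptionConfiguration` file passed to the API server with the `--encryption-provider-config` flag. This sketch generates one as JSON, which YAML parsers also accept, using the local `secretbox` provider with a freshly generated 32-byte key; the key name is arbitrary. The first provider in the list encrypts new writes, while `identity` lets the API server still read Secrets stored before encryption was turned on. Because this key then sits in a file on the control plane, a KMS provider backed by your cloud provider's key management system is usually a stronger choice for production.

```python
import base64
import json
import os
from typing import Any, Dict


def encryption_config(key_name: str = "key1") -> Dict[str, Any]:
    """Build an EncryptionConfiguration that encrypts Secrets with a fresh 32-byte key."""
    secret = base64.b64encode(os.urandom(32)).decode("ascii")
    return {
        "apiVersion": "apiserver.config.k8s.io/v1",
        "kind": "EncryptionConfiguration",
        "resources": [
            {
                "resources": ["secrets"],
                "providers": [
                    # The first provider is used to encrypt new writes.
                    {"secretbox": {"keys": [{"name": key_name, "secret": secret}]}},
                    # identity still reads Secrets that were stored unencrypted.
                    {"identity": {}},
                ],
            }
        ],
    }


print(json.dumps(encryption_config(), indent=2))
```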

Know these answers, or learn them ASAP

I recently wrote a book about container security, and the five questions in this article are among the 28 questions enterprises should ask themselves, listed in the book's appendix, "The Container Security Checklist."

Again, I recommend that you be able to answer yes to all five of the questions covered here, but I realize you may have very good reasons to answer no to one or more of them. However, if you're not certain of an answer, make it a priority to find out. The security of your entire deployment depends on it.

Don't miss my keynote, "The Beginner's Guide to the CNCF Technical Oversight Committee," as well as my session, "Getting Started with Cloud-Native Security," at KubeCon+CloudNativeCon Europe 2020 Virtual on August 17, 2020.
