Security

DFIR: The unsung hero of cybersecurity

August 08, 2025 • 4 min read

Rethinking vulnerability management

August 06, 2025 • 3 min read

Available now on Azure Marketplace – OpenText Core Threat Detection and Response

July 22, 2025 • 3 min read

How OpenText™ DFIR tools deliver faster, deeper, and more defensible digital investigations

July 18, 2025 • 4 min read

Data owners and their role in data management and security

July 17, 2025 • 3 min read

7 DevSecOps best practices for modern development teams

July 16, 2025 • 4 min read

Accelerate secure coding with AI and real-time developer learning

July 15, 2025 • 3 min read

Digital trust is the new currency

July 14, 2025 • 4 min read

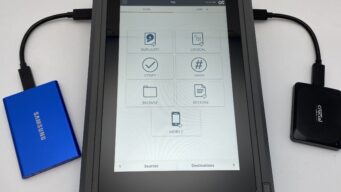

Speed, integrity, and confidence: Meet the new OpenText™ Forensic TX2 Imager

July 14, 2025 • 3 min read

Secure AI development with OpenText: Protecting GenAI-enabled applications at scale

July 14, 2025 • 2 min read