Every company wants its marketing videos to go viral, its special offers to be snapped up within seconds, and its customers to jump at the first chance to use its newest technology. But when that opportunity arrives, is your e-commerce website prepared for the peak loads that come with it, or are you headed for a major, it-could-never-happen-to-us software quality disaster?

During my tenure as a quality assurance and testing leader for several top companies, including Equifax, MyFICO, T-Mobile USA, Healthcare IT Leaders, and Run Consultants, I’ve experienced—and remedied—several large-scale software quality disasters. Though they varied in terms of what went wrong and the consequences, you can learn from my mistakes and apply all the right best practices. With proper performance engineering, the following three situations are avoidable.

Failure 1: Valentine's Day promotion knocks out wireless carrier

What went wrong: At T-Mobile, we brought in a specialized telecom performance testing team from Amdocs to conduct testing for a new release, and it identified several bottlenecks and issues under high load. After investigating the performance issues, the development team decided that the issues weren’t critical and could be fixed later. For those issues to become a problem, the team said, the site would need to receive more than 10 times its projected peak load. They were so wrong.

On Valentine’s Day, T-Mobile ran a “buy one, get one free” smartphone promotion. Because it was a nationwide campaign, thousands of customers searched the web for a second phone, all at the same time. Those searches created a different usage pattern than the one we had expected when we tested the promotion beforehand. The site first slowed, and then crashed, and we were down for more than an hour. Stores had similar experiences and reported them to T-Mobile's vice president of operations.

How we fixed it: After customers began giving up on purchasing through the website and in stores, our team put in place a couple of fixes and then restarted the server. When we met with the executive management team at headquarters the following week, we expected them to be upset. But instead they were happy that the promotion attracted more subscribers than expected, and they provided additional (previously requested) funding to build a better performance testing lab. The new testing environment was logically representative of production and delivered more reliable testing results. Then, in the next budget year, senior management granted a joint QA/Dev budget request for service virtualization. That allowed us to test with virtual third-party systems without actually hitting them. We were able to load test earlier in the project, before all systems were available.
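To make the idea of service virtualization concrete, here is a minimal sketch in Python using only the standard library. It is not the commercial tooling described above. It simply stands up a local stand-in for a third-party system that returns canned responses with a simulated delay, so a load test can run without touching the real partner. The endpoint path, the canned payload, and the latency value are all hypothetical.

```python
import http.client
import json
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical canned responses standing in for a real third-party system.
CANNED = {"/partner/eligibility": {"eligible": True, "source": "virtual"}}
SIMULATED_LATENCY_S = 0.05  # assumed partner response time, tune to match reality


class VirtualService(BaseHTTPRequestHandler):
    def do_GET(self):
        time.sleep(SIMULATED_LATENCY_S)  # mimic the partner's latency
        payload = CANNED.get(self.path)
        status = 200 if payload is not None else 404
        if payload is None:
            payload = {"error": "not found"}
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep load-test output quiet


def start_virtual_service(port=0):
    """Start the stub on a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), VirtualService)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch(server, path):
    """Call the stub the way the application under test would call the partner."""
    host, port = server.server_address
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, json.loads(resp.read())
    finally:
        conn.close()
```

Pointing the application's partner URL at the stub's address is what lets load testing start before the real systems are available.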

Lessons learned: Don’t ignore defects found in performance testing. Have the courage to be clear about project risk, and be sure to budget for and request the investment in tools and the performance testing environment that you need. Sometimes even an outage has positive consequences. We ran many promotions over the next few years without significant performance issues.

Failure 2: How Suze Orman killed a credit monitoring website

When I was at Fair Isaac, the MyFICO website received some unexpected publicity when one of our advocates, financial expert and TV host Suze Orman, went on "The Oprah Winfrey Show" and spoke about the benefits of credit monitoring. She mentioned our site, and the popular show displayed the website address on-screen.

What went wrong: Immediately following the television broadcast, traffic on the site hit unprecedented levels, visitors began watching a video, and the MyFICO site crashed.

How we fixed it: We began hosting the video content from the site with a cloud provider instead of on our own servers. In this way, we could automatically scale during peak loads.

Lesson learned: Streaming content is resource intensive, especially when peak volumes of website visitors are high. You can find both commercial and open source cloud-based testing tools that will let you easily ramp up to more than a million virtual users and test from the cloud to generate virtual load from multiple cities. Most cloud providers, including Amazon Web Services, HPE, and Google, let you choose from multiple locations in which you can install the load generators so that testers can simulate accurate load scenarios that fully exercise load balancing and region-specific code. You can use this technique to vary the network and connection speed to improve the realism of your load-test scenarios.
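As a rough illustration of ramping virtual users across several load-generator locations, the sketch below computes how many users each location should be running at each step of a linear ramp. It is plain Python rather than any particular tool's API, and the region names and weights are made-up assumptions.

```python
def ramp_plan(peak_users, ramp_seconds, step_seconds, region_weights):
    """Return [(elapsed_seconds, {region: virtual_users})] for a linear ramp.

    region_weights maps each (hypothetical) load-generator location to its
    relative share of traffic, e.g. {"us-east": 5, "us-west": 3, "eu": 2}.
    """
    total_weight = sum(region_weights.values())
    largest = max(region_weights, key=region_weights.get)
    plan = []
    for t in range(step_seconds, ramp_seconds + 1, step_seconds):
        target = round(peak_users * t / ramp_seconds)
        per_region = {
            region: target * weight // total_weight
            for region, weight in region_weights.items()
        }
        # Integer division can drop a few users; give them to the largest region.
        per_region[largest] += target - sum(per_region.values())
        plan.append((t, per_region))
    return plan


if __name__ == "__main__":
    weights = {"us-east": 5, "us-west": 3, "eu": 2}  # assumed traffic mix
    for elapsed, users in ramp_plan(1000, 60, 20, weights):
        print(elapsed, users)
```

A plan like this can then be fed to whichever load tool you use, with each location's generator started at its own share of the target.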

Failure 3: 401(k) site gives it up at 40 concurrent users

When I worked for Invesco Retirement, we hired a third party to develop a web-based application for companies to process their 401(k) contributions online. The pilot worked OK, but when many customers started uploading documents and running reports at the same time, the site began performing badly, with some requests taking several minutes to load—or timing out altogether.

What went wrong: The Invesco website was unable to handle more than 40 concurrent users. Our subsequent investigation revealed that 70% of the issues customers experienced stemmed from suboptimal configuration and customization we had done, while the other 30% derived from inefficient queries or coding issues and a memory leak in the third-party reporting system.

How we fixed it: We used performance testing tools to diagnose the issues, actively tuning the production system after hours over a three-month period. We worked when the stock market was closed, testing the production configuration to make sure it was correct, and we were careful to set all the configurations and settings back to normal before trading hours began each day. After we fine-tuned everything, the web application's performance increased sufficiently to support the production-level load.
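A simple way to find a limit like the 40-user ceiling is a step-load test: run the same request at increasing concurrency levels and note where response time crosses an acceptable threshold. The sketch below shows the shape of that approach using Python's standard library. It is not the tooling used in this project, and `fake_request`, the four-worker pool, and the latency limit are stand-in assumptions for a real call to the system under test.

```python
import statistics
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(request_fn):
    """Return how long one request took, in seconds."""
    start = time.perf_counter()
    request_fn()
    return time.perf_counter() - start


def measure(request_fn, concurrency, requests_per_user=5):
    """Run `concurrency` simulated users in parallel; return p95 latency in seconds."""
    def user():
        return [timed_call(request_fn) for _ in range(requests_per_user)]

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [pool.submit(user) for _ in range(concurrency)]
        latencies = [lat for f in futures for lat in f.result()]
    return statistics.quantiles(latencies, n=20)[-1]  # 95th percentile cut point


def first_failing_level(results, p95_limit_s):
    """Given {concurrency: p95_seconds}, return the lowest level over the limit."""
    for level in sorted(results):
        if results[level] > p95_limit_s:
            return level
    return None


# Hypothetical system under test: a backend that serves only 4 requests at once.
_backend_workers = threading.BoundedSemaphore(4)


def fake_request():
    with _backend_workers:
        time.sleep(0.01)


if __name__ == "__main__":
    results = {level: measure(fake_request, level) for level in (2, 4, 8, 16)}
    print(results)
    print("first level over limit:", first_failing_level(results, p95_limit_s=0.025))
```

Once the failing level is known, tuning can be repeated against the same steps to confirm that each configuration change actually moves the ceiling.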

Lessons learned: Test and tune the production environment before you go live. Conduct code reviews, which would have caught some of the coding mistakes. Work with developers, database administrators, application subject matter experts, and operations team members to tune the system for peak performance.

Don't let this happen to you

E-commerce site crashes and time-outs still happen far too often. There are even multiple sites on the web that you can use to check where, why, and for how long a site has been down, while others assess application performance and response time. This indicates to me that failing to scale is still a real problem.

To ensure these software quality disasters don’t happen to your business website, remember these takeaways:

- Don’t cut corners when you’re performance testing. Make your performance testing environment similar to your production environment.

- Fix any issues your testers find immediately, before they can become a customer-facing problem.

- And finally, while it’s important to collaborate with business partners, sometimes they may not know what could happen because neither they nor you are in control of how many customers visit your e-commerce site. If they’re just guessing at how many people will visit for a given promotion, click your offer, play your video, or log in to access data, the risk falls back on your IT team.

Over the years, I have led hundreds of successful software releases, but it was these three big failures that helped me grow the most. So that's it; I’ve confessed my biggest software QA failures. What are yours—and what did you learn from them?

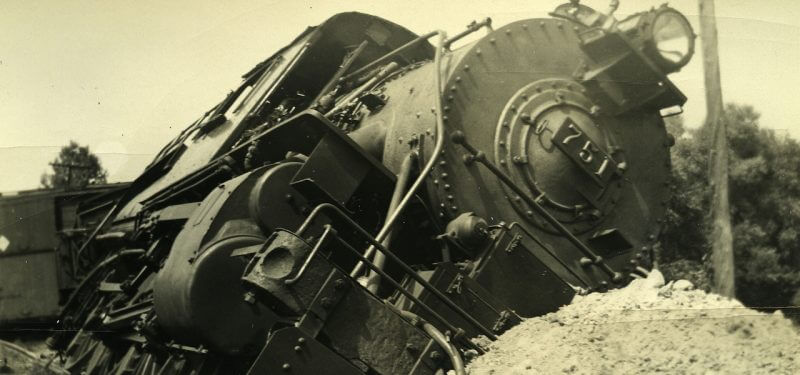

Image credit: Flickr
