All managers think they want containers, but few teams know what to do with them. When done thoughtfully, containerizing your services can transform the way your organization thinks about testing.

Gone are the days of maintaining x different compute environments with y different configurations. Imagine, instead, spinning up just the code you need, on the machine type it needs, for only as long as you need it. Your teams can drastically reduce cycle time while still increasing overall product quality.

But what does containerization actually mean when you have a legacy codebase and you're attempting to practice continuous integration and deployment? And what capabilities can it unlock for your testing organization?

Containerization will transform the way you think about testing your code, and what it means to perform an end-to-end test. Here's how it works.

What is a container?

In its simplest form, a container is a collection of code, a configuration, and runtime dependencies, all bundled together with an execution engine so that it runs in isolation on a host machine, as illustrated below:

Figure 1. The four components of a container image for a service in a test environment.

Most container specifications today are on the Docker platform (though there are many different container orchestration engines, of which Docker Swarm is just one).

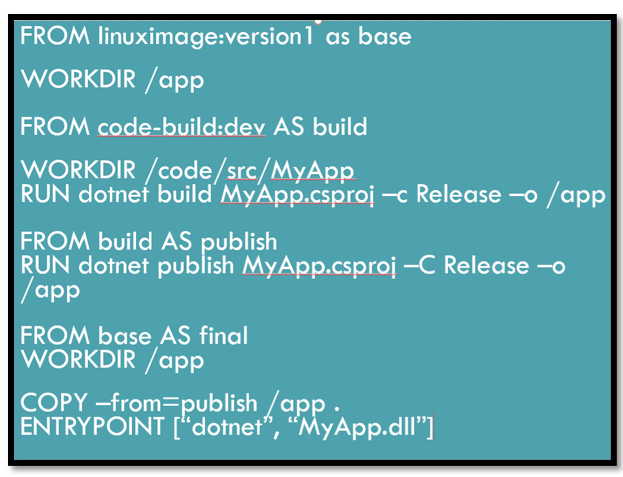

Creating a container is as simple as building an executable from source control, bundling it with a specified base image, and telling Docker to start it. Docker then runs the container as an isolated process on the host machine, typically sharing the host's kernel rather than booting a full guest operating system, with the specified application running and ready for use. (See Figure 2, below.)

Figure 2. This sample Dockerfile defines a container for a C# service.

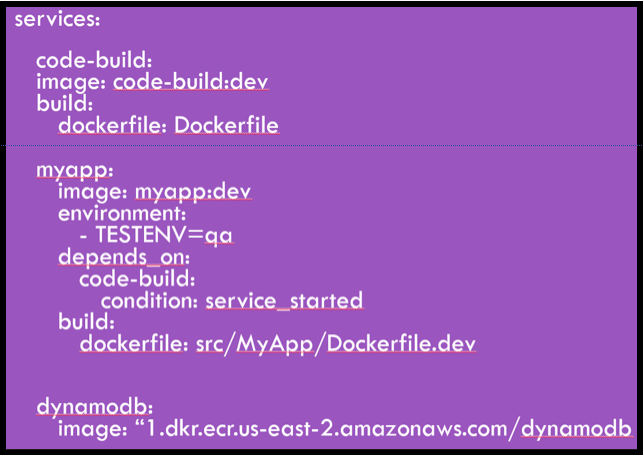

Along those lines, engineers can specify that multiple services must be composed together to make a full application. Using many Dockerfiles, they can string together as many services as necessary, each one either built from source or pulled from a trusted, published image. (See Figure 3.)

Using Docker Compose, engineers can create any sort of environment that might be needed—locally, on-demand, and with precisely the same OS version and dependencies as it would have in production.

Figure 3. This Docker Compose .yml file defines a container for a QA environment that consists of several different services.
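As a rough illustration of the shape such a definition takes, the Python sketch below builds a two-service environment as a plain dictionary and serializes it. Compose files are normally written by hand in YAML; JSON is used here only because it is a subset of YAML and keeps the sketch within the standard library. The service names (`orders-api`, `orders-db`), the build path, the ports, and the password are all hypothetical and are not taken from Figure 3.

```python
import json


def build_qa_environment():
    """Return a Compose-style definition for a small, hypothetical QA stack."""
    return {
        "services": {
            # Service built from the change under test (hypothetical path).
            "orders-api": {
                "build": {"context": "./orders-api"},
                "ports": ["8080:80"],
                "depends_on": ["orders-db"],
                "environment": {"DB_HOST": "orders-db"},
            },
            # Dependency pulled from a published image rather than built.
            "orders-db": {
                "image": "postgres:16",
                "environment": {"POSTGRES_PASSWORD": "test-only"},
            },
        }
    }


# Serialize the definition; JSON output is also valid YAML.
compose_json = json.dumps(build_qa_environment(), indent=2)
print(compose_json)
```

Saved to a file, output like this could be passed to Docker Compose with its `-f` option. In practice, most teams would keep the equivalent YAML file in source control next to the services it describes.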


Use containers for your test environments

As nearly every software tester has experienced, test environments are a mixed blessing. On one hand, they allow end-to-end tests that would otherwise have to be executed in production.

Without a test environment, testing teams would be shipping code that hasn't been tested across functional boundaries out to users—and hoping for the best. A well-configured and maintained test environment, one that closely mimics production and contains up-to-date code deployments, can provide a safe and sane way for testers to validate a scenario before it gets into the hands of a customer.

Problematically, however, test environments encourage a mode of development that is fast becoming outdated: long integration cycles, an untrustworthy main source trunk, and late-stage testing.

The most productive, highest-performing engineering teams do just the opposite. They need to be able to trust that code in the main trunk could go to production at any time. They often shift left on quality, with the majority of testing happening before a code change even lands. They rarely have time to spend days or weeks "integrating" changes and testing them in a QA environment.

The highest-performing teams know that the earlier in the development process a bug is found, the cheaper it is to fix—and they want to help all developers find bugs, quickly.

Also, test environments tend to be difficult to maintain, often have mixed or unique configurations, and rarely resemble their production counterparts in either operating system or dependency versions. Updating a test environment is generally at the very bottom of a QA or operations team's priority list.

The key benefits of containers

With containers, you get a well-maintained test environment without the negative implications for a team's development process. Test engineers can, on their own development machine, spin up exactly the services and dependencies that would be available in production, but with their own code deployed on top.

They can fully test this code, not just at the unit level, but at the integration level as well. They can even execute specialty tests—such as user interface visual validation or security scans—on that code, and feel confident that what they are testing is the same as what would be deployed in production.


An engineer can even share access to the services with a teammate or dedicated tester, who can then provide a second set of eyes on the change before providing signoff.

Test data management, and simpler test runs

One of the most difficult tasks in maintaining a test environment is ongoing curation of the data in that environment, especially as it undergoes churn and interaction from a variety of different engineers and teams.

Because each containerized environment is implicitly siloed, containerization encourages managing test data as a part of the test suite. The database is also a part of the container stack, so rather than hope that the environment has been populated with the correct data set, a team can simply populate the database as a part of initializing a test run.
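A minimal sketch of that idea follows, with an in-memory SQLite database standing in for the database container so that the example runs anywhere. The `users` table, the seed rows, and the function names are hypothetical; in a real stack the same seeding step would connect to whatever database the container stack provides.

```python
import sqlite3

# Hypothetical seed data, kept in source control next to the tests that use it.
SEED_USERS = [
    (1, "alice@example.com", "active"),
    (2, "bob@example.com", "suspended"),
]


def seed_database(conn):
    """Create the schema and load the known data set before any test runs."""
    conn.execute(
        "CREATE TABLE users ("
        "id INTEGER PRIMARY KEY, email TEXT NOT NULL, status TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO users (id, email, status) VALUES (?, ?, ?)", SEED_USERS
    )
    conn.commit()


def active_emails(conn):
    """Code under test: list the email addresses of active users."""
    rows = conn.execute(
        "SELECT email FROM users WHERE status = 'active' ORDER BY id"
    )
    return [row[0] for row in rows]


def run_test():
    """Initialize a private database, seed it, then exercise the code."""
    conn = sqlite3.connect(":memory:")  # stand-in for the database container
    try:
        seed_database(conn)
        return active_emails(conn)
    finally:
        conn.close()


result = run_test()
print(result)
```

Because the seed rows live in the same repository as the assertions that depend on them, a change to one can be reviewed alongside the other.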

Because the created container is unique to a single change set, the test runs are simpler. Often a test suite will no longer need to account for more complex scenarios such as parallel test runs or bad starting states.


If a run fails or is suspected of corruption, an engineer can simply trash the offending container, start a fresh run, and repopulate the container with clean, uncorrupted test data.
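The discard-and-recreate pattern can be sketched with a context manager. As an assumption for illustration, an in-memory SQLite database again stands in for a disposable container, and the `carts` table and its rows are hypothetical. The point is only that damage done in one run never reaches the next.

```python
import sqlite3
from contextlib import contextmanager

# Hypothetical clean data set that every run starts from.
SEED_CARTS = [("alice", 3), ("bob", 0)]


@contextmanager
def disposable_database():
    """Yield a freshly seeded database and throw it away afterwards."""
    conn = sqlite3.connect(":memory:")  # stand-in for a throwaway container
    try:
        conn.execute(
            "CREATE TABLE carts (owner TEXT PRIMARY KEY, item_count INTEGER NOT NULL)"
        )
        conn.executemany("INSERT INTO carts VALUES (?, ?)", SEED_CARTS)
        conn.commit()
        yield conn
    finally:
        conn.close()  # closing an in-memory database discards all of its state


def count_carts(conn):
    return conn.execute("SELECT COUNT(*) FROM carts").fetchone()[0]


# First run: something goes wrong and wipes the data.
with disposable_database() as conn:
    conn.execute("DELETE FROM carts")
    corrupted_count = count_carts(conn)

# Second run: a brand-new environment with clean data, unaffected by the first.
with disposable_database() as conn:
    fresh_count = count_carts(conn)

print(corrupted_count, fresh_count)
```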

This data-as-code has the bonus side effect of being code-reviewable, trackable, and easy to update concurrently with the tests that will use it. Many of these benefits are the same ones that come with the infrastructure-as-code configuration that enables containerization.

The benefits outweigh the risks

Containerizing existing services can seem like a daunting task, but the benefits in time saved from no longer maintaining myriad test environments or debugging issues in production far outweigh the initial investment. The earlier you can identify bugs in the development cycle and the further left you can push software testing, the more improvements you'll see, not just in code quality but in the quality of life for all your engineers as well.

Imagine a world where a tester can work in exactly the same environment as a developer, and where a bug report isn't routinely met with a shrug and a "works on my machine" dismissal. Imagine a world where a team no longer must worry about a compatibility matrix between the operating system version and the software release version.

That world is here, today, if your teams take advantage of containerization.

Want to know more? During my STAREAST conference session, "Continuous Testing Using Containers," I'll offer more tips about how to get started with containers as part of your testing strategy. The conference runs April 28 to May 3, in Orlando, Florida. TechBeacon readers can save $200 on registration fees by using promo code SECM.

Can’t make it? Register for STAREAST Virtual for free to stream select presentations.
