To obtain golden eggs, one must care for the goose who lays them. The goose I want to highlight in this article is your CI/CD pipeline.

Because there are many sick geese in the software business, it's time to describe some common anti-patterns, their impact, and how to fix them.

Underestimating the importance of the pipeline

The CI/CD pipeline is to software development what the production line is to manufacturing. Successful manufacturers know they must optimize the production line because quality, speed, and throughput depend on it. Investments are carefully balanced between production capacity and desired outcomes.

In software development, all too often the CI/CD pipeline is treated as a second-class citizen. Building another feature is always prioritized higher than investing in the pipeline. As a result, the velocity and quality of your software may constantly deteriorate.

Instead, balance your investments between those needed to improve the pipeline and those needed for new features.

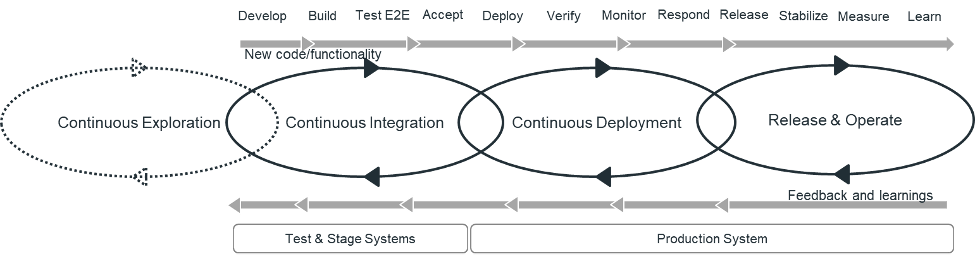

Figure 1: CI/CD pipeline extended with release and operate steps. Source: Micro Focus

Not implementing pipeline modeling

A map is useless if you don't know where you are. How do you identify the major issues in your pipeline? Many people choose their improvement initiatives on gut feeling, whether that means implementing a new technology or tool or simply working on their favorite areas.

They are understandably proud of the improvements they have made, but their efforts yield no significant return on investment, because an improvement that does not address an existing bottleneck is unlikely to have a meaningful impact on end-to-end performance.

A systematic way to identify the bottlenecks in your pipeline is pipeline modeling. It gives you an end-to-end view of your pipeline and helps you discover where work gets stuck. As part of your modeling, measure lead time, process time, and percent complete and accurate (%C/A). Measure %C/A for each step, and then calculate it for the overall end-to-end process.

Use this information to build a prioritized improvement backlog. Allocate enough capacity to constantly remove bottlenecks and measure progress by comparing the end-to-end performance with the previous state.
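To make the modeling step concrete, here is a minimal sketch, assuming you have already collected lead time, process time, and %C/A for each step (for example, from pipeline logs or team interviews). The step names and numbers are hypothetical. It sums the times, rolls up %C/A by multiplying the per-step values (the usual value-stream-mapping convention), and orders the backlog by waiting time (lead time minus process time), which is only one possible heuristic for prioritizing.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class Step:
    name: str
    process_time_h: float  # hands-on working time for one item
    lead_time_h: float  # elapsed time from entering the step to handing off
    pct_ca: float  # percent complete and accurate, as a fraction 0..1


def summarize(steps: list[Step]) -> dict:
    """Roll per-step measurements up into end-to-end pipeline metrics."""
    lead = sum(s.lead_time_h for s in steps)
    process = sum(s.process_time_h for s in steps)
    return {
        "lead_time_h": lead,
        "process_time_h": process,
        # Share of elapsed time in which someone actually works on the item.
        "activity_ratio": process / lead,
        # Probability that an item passes every step without rework.
        "rolled_pct_ca": prod(s.pct_ca for s in steps),
        # Heuristic backlog order: steps where work waits longest come first.
        "backlog": [
            s.name
            for s in sorted(
                steps,
                key=lambda step: step.lead_time_h - step.process_time_h,
                reverse=True,
            )
        ],
    }


if __name__ == "__main__":
    pipeline = [
        Step("build", 0.5, 1, 0.95),
        Step("test", 4, 24, 0.80),
        Step("staging deploy", 2, 72, 0.70),
        Step("production deploy", 1, 8, 0.98),
    ]
    print(summarize(pipeline))
```

With these sample numbers, the end-to-end lead time is 105 hours against 7.5 hours of process time, the rolled %C/A is about 52%, and the staging deployment tops the backlog. Re-running the same calculation after each improvement gives you the before/after comparison.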

Letting infrastructure become a deployment bottleneck

Deployment starts during the continuous integration (CI) cycle of your pipeline, when you build not only your application but also the infrastructure it will run on and the application deployment scripts. In the early test stages, don't test just the application; test your deployment scripts as well.

The time required to deploy the infrastructure and the solution is often a bottleneck, especially when the concept of flow is not deeply anchored in the team's thinking. The longer the infrastructure setup takes, the more likely you are to work in larger batches and, as a consequence, with limited flow.
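The link between setup time and batch size comes down to simple arithmetic. In this toy model (an assumption for illustration, not a formula from this article), every deployment to an environment costs a fixed setup time, and a team tolerates only a certain overhead per change. The smallest batch that keeps the amortized setup cost within that tolerance is the setup time divided by the tolerated overhead, rounded up.

```python
import math


def min_batch_size(setup_hours: float, tolerated_overhead_per_change_h: float) -> int:
    """Smallest number of changes per deployment that keeps the amortized
    environment setup cost per change within the tolerated overhead."""
    if tolerated_overhead_per_change_h <= 0:
        raise ValueError("tolerated overhead must be positive")
    return max(1, math.ceil(setup_hours / tolerated_overhead_per_change_h))


if __name__ == "__main__":
    # Hypothetical numbers: manual provisioning vs. on-demand provisioning.
    print(min_batch_size(40, 2))   # prints 20: manual setup pushes teams toward big batches
    print(min_batch_size(0.5, 2))  # prints 1: a single change per deployment is affordable
```

Cutting setup time, not asking teams for discipline, is what makes small batches economical.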

If infrastructure is not available on demand, or provisioning takes time due to manual efforts, change it. Invest in a private or public cloud to ensure that your infrastructure is not a bottleneck.

Setting up the DevOps pipeline manually

Setting up a CI/CD pipeline requires expertise and effort. At least in the enterprise context, this is a full-time job. It includes setting up, integrating, and deploying many tools for configuration management, build, static code analysis, binary repository, test, deployment, code signing, metrics, performance, and infrastructure.

After the setup, your pipeline—with all the tools, plugins, and integrations—requires maintenance: upgrading individual tools to new versions, integrating the new versions into the bigger ecosystem, troubleshooting issues, and implementing improvements. These efforts are often underestimated and understaffed.

To avoid these issues, provide the pipeline as a shared service or software factory. Dedicated experts make sure that you have a well-working pipeline and relieve the product teams of this work. But be cautious: don't let the service become a silo or a bottleneck. A well-balanced interaction model between the service and the product teams is key to success.

Discovering and resolving defects late

How often do you find defects late in the process, and how often does this delay your release and negatively impact the quality of your solution?

Finding a defect at a later stage than necessary often blocks the discovery of other defects or requires extra effort and workarounds. Integration becomes a nightmare, and releases suffer from long stabilization phases. This is far from continuous delivery.

Some examples include:

- A performance issue was discovered very late because testing was blocked by a defect that crashed the system just after the tests started. A huge defect backlog had delayed the fix, and its deployment to the performance testing environment, for weeks.

- A new component version was of such low quality that the consuming team, which depended on that version to do its work, considered early integration a waste of effort. However, a lot of functionality required this component, and when it was finally integrated, many issues surfaced late. The fixes took time, and ultimately the whole system was delayed. The deployed batch was big, which increased the deployment risk. Additional service interruptions and fixing efforts followed.

Faster feedback enables better learning and earlier defect resolution, and developers improve and make fewer mistakes (defect prevention). The fewer defects in the system, the better the flow.

Make sure you design your pipeline for fast feedback, and immediately fix defects when they occur. This will increase your productivity and the performance of your engineers and reduce work in process.
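One way to design for fast feedback is to run the cheapest, fastest checks first and stop at the first failure, so a developer hears about a broken unit test in minutes instead of after an hour of system tests. The sketch below assumes the stages are independent checks that can be reordered freely; the stage names and durations are hypothetical, and real pipelines also have ordering dependencies (you cannot system-test before you build).

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Stage:
    name: str
    expected_minutes: float
    run: Callable[[], bool]  # returns True when the stage passes


def run_fail_fast(stages: List[Stage]) -> Tuple[List[str], Optional[str]]:
    """Run stages from fastest to slowest; return (stages run, first failed stage or None)."""
    executed: List[str] = []
    for stage in sorted(stages, key=lambda s: s.expected_minutes):
        executed.append(stage.name)
        if not stage.run():
            return executed, stage.name  # report immediately, skip the slower stages
    return executed, None


if __name__ == "__main__":
    stages = [
        Stage("system tests", 60, lambda: True),
        Stage("unit tests", 3, lambda: False),  # simulated failure
        Stage("lint", 1, lambda: True),
    ]
    print(run_fail_fast(stages))
```

Here the failing unit tests are reported after about four minutes of work, and the hour-long system test run never starts.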

Ignoring error types

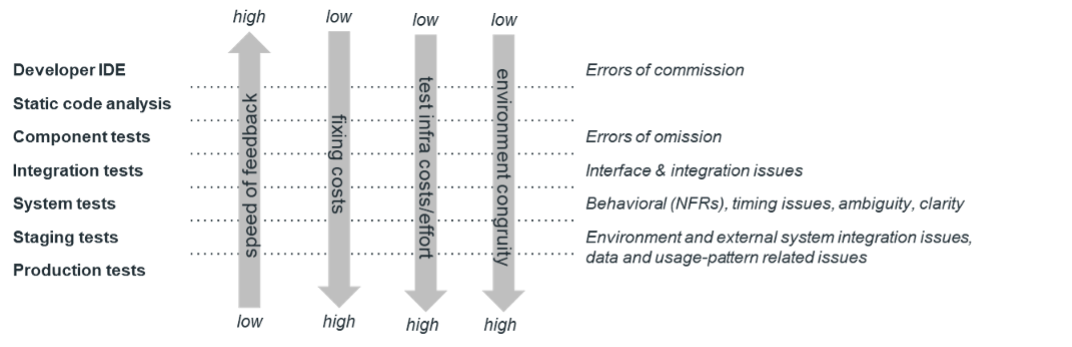

Most error types have can be found and fixed only after a certain stage. Missing that stage leads to the above-described anti-pattern of late discovery. The table below describes the characteristic of each test stage, feedback time, error types, fixing costs, test infrastructure costs/effort, and environment congruity.

Figure 2: Where to find which types of defects. Source: Micro Focus

In the developer IDE, you get the fastest possible feedback: while you type, or at the latest when you compile and execute your local unit tests. Because the developer is still immersed in the code and needs no effort to locate the issue, fixing is fast and cheap.

The main error type you will find here is the error of commission, meaning something implemented incorrectly: the IDE plugin for static code analysis flags a potential memory leak, the security scanner highlights a potential vulnerability, the unit tests catch an off-by-one error, and so on.
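As an illustration of an error of commission that a local unit test catches within seconds, consider a hypothetical helper that computes sums over a sliding window. Writing the loop bound as `len(values) - k` instead of `len(values) - k + 1` silently drops the last window; the first test below fails as soon as the developer runs it against that buggy version.

```python
def window_sums(values: list[int], k: int) -> list[int]:
    """Sum of every contiguous window of length k."""
    if k <= 0:
        raise ValueError("k must be positive")
    # The off-by-one version would use range(len(values) - k) and miss the last window.
    return [sum(values[i:i + k]) for i in range(len(values) - k + 1)]


def test_window_sums_includes_last_window() -> None:
    assert window_sums([1, 2, 3, 4], 2) == [3, 5, 7]


def test_window_longer_than_input() -> None:
    assert window_sums([1], 2) == []


if __name__ == "__main__":
    test_window_sums_includes_last_window()
    test_window_longer_than_input()
    print("ok")
```

In a file named like `test_windows.py`, pytest discovers the `test_` functions automatically; the `__main__` block only lets the file run on its own.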

Static code analysis during the build can find the same issues as the IDE plugin, but generally does so later. The issues it finds pile up in a report, need to be assigned to the right developer, and therefore take longer to fix. Given the long list of potential issues, time pressure, and process overhead, the fixes are often never made.

It makes sense to run static code analysis during the build and track its results to confirm code cleanliness, but for developer feedback and productivity, IDE-based solutions are better.

Errors of omission are things the developer failed to implement. Because you don't know what you don't know, these are not found during unit testing, but they can often be found during component testing, which checks whether the component does what it should. Test-driven development (TDD) tries to apply this perspective during unit testing and can help simulate the consumer perspective earlier in the process.
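A test-first component test makes this consumer perspective explicit: each acceptance criterion from the specification becomes a test before the code exists, so a criterion nobody implemented shows up as a failing test instead of a surprise in system testing. The `Cart` component and its criteria below are hypothetical.

```python
class Cart:
    """Hypothetical component under test."""

    def __init__(self) -> None:
        self._items: dict[str, int] = {}

    def add(self, sku: str, qty: int = 1) -> None:
        if qty <= 0:
            raise ValueError("qty must be positive")
        self._items[sku] = self._items.get(sku, 0) + qty

    def remove(self, sku: str) -> None:
        # Removing an unknown SKU is a no-op: easy to omit if no test demands it.
        self._items.pop(sku, None)

    def quantity(self, sku: str) -> int:
        return self._items.get(sku, 0)


# Written first, one test per acceptance criterion in the (hypothetical) spec.
def test_adding_same_sku_accumulates() -> None:
    cart = Cart()
    cart.add("A")
    cart.add("A", 2)
    assert cart.quantity("A") == 3


def test_remove_deletes_item() -> None:
    cart = Cart()
    cart.add("A")
    cart.remove("A")
    assert cart.quantity("A") == 0


def test_removing_unknown_sku_is_noop() -> None:
    cart = Cart()
    cart.remove("missing")  # must not raise
    assert cart.quantity("missing") == 0


def test_rejects_non_positive_quantity() -> None:
    try:
        Cart().add("A", 0)
    except ValueError:
        return
    raise AssertionError("expected ValueError")
```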

Integration tests can find interface issues and any kind of dynamic issues that occur only at runtime. Up to this stage, testing focuses on smaller parts of the system, feedback is fast, and test environment costs are low.

With system tests, cost considerations start in earnest. For these tests, you need the "whole" environment—systems, integrated systems, setup, and installation of the software. This requires a full setup and deployment of the often-large environment.

System tests are based on the full system; however, technical or economic constraints might force you to work with stubbed external integrations or downsized infrastructure.

Nevertheless, this is the first time that real end-user functionality can be tested. This again uncovers errors of omission, including missing functions or missing parts of the specified functionality. Also, system testing is the first time you can experience behavioral issues, such as crashes or errors due to problematic runtime interactions or network issues between parts of the system.

Testing from the end user's perspective is also the first time you can discover functionality that was implemented incorrectly because the specification was ambiguous or unclear, or because requirements from the product owner or product manager were misunderstood.

For its part, staging verifies two things: whether the (hopefully) automatic deployment works, and whether production-like infrastructure and external system integrations work as expected. Environment congruity ensures that any issues related to networking, firewall, storage, security policies, high availability, performance etc. are found before they cause trouble in production.

Production is the latest possible stage in your pipeline, so feedback is delayed as long as possible. At this stage, locating, reproducing, debugging, and fixing the issue is time-consuming and therefore expensive. However, some environment-specific defects may occur only here, because the earlier stages lack full environment congruity (the degree to which their setup is identical to production) and so do not cover the production scenario. The same is true for unknown usage patterns and issues related to production data.

Analyze where you currently find which kinds of defects. This gives you the information you need to optimize your testing strategy, speed up feedback and learning, decrease costly rework, and ultimately increase quality, flow, and throughput in your pipeline.
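A lightweight way to start this analysis is to tag every defect with its error type and the stage where it was found, then count escapes: defects found later than the earliest stage that could have caught them. The stage list and the mapping from error type to earliest stage below are assumptions distilled from the stage descriptions above, not a reproduction of Figure 2; adjust them to your own pipeline.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple

# Pipeline stages from earliest to latest feedback.
STAGES = ["ide", "build", "component", "integration", "system", "staging", "production"]

# Assumed earliest stage at which each error type can be detected.
EARLIEST_STAGE = {
    "commission": "ide",
    "omission": "component",
    "interface": "integration",
    "requirements": "system",
    "environment": "staging",
}


@dataclass
class Defect:
    error_type: str
    found_in: str


def escape_report(defects: List[Defect]) -> Tuple[Counter, Counter]:
    """Return (defects per stage where found, escaped defects per error type)."""
    found = Counter(d.found_in for d in defects)
    escaped = Counter(
        d.error_type
        for d in defects
        if STAGES.index(d.found_in) > STAGES.index(EARLIEST_STAGE[d.error_type])
    )
    return found, escaped


if __name__ == "__main__":
    sample = [
        Defect("commission", "ide"),
        Defect("commission", "system"),  # escaped: could have been caught in the IDE
        Defect("omission", "component"),
        Defect("interface", "production"),  # escaped: could have been caught in integration
    ]
    print(escape_report(sample))
```

Error types with high escape counts point to the stages where earlier or better tests would pay off most.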

Missing an explicit deployment strategy

For continuous flow, the automated deployment of new code and infrastructure is key to success. Any reliability issues in this area, whether from a lack of automation or from error-prone deployment code, inhibit flow, delay feedback, and lead to larger batch sizes. Therefore, you need a clear deployment strategy.

Developing your deployment scripts and configurations is a parallel activity to your application development and follows the same rules, such as using configuration management and architecting for flow and testability, robustness, and easy debugging capabilities. If required, also optimize the speed of your deployment.
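Treating deployment code like application code means giving it the same qualities. The following is a minimal sketch of a single deployment step, assuming you can express the step as two hypothetical callables: a check of whether the target state is already reached, and an action that tries to reach it. Checking first makes the step idempotent (safe to re-run), retries with exponential backoff absorb transient failures, and a log line for every attempt makes failed runs easier to debug. Injecting `sleep` keeps the step testable without real waiting.

```python
import logging
import time
from typing import Callable

log = logging.getLogger("deploy")


def deploy_step(
    name: str,
    is_done: Callable[[], bool],
    apply: Callable[[], None],
    retries: int = 3,
    base_delay_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Idempotent, retrying deployment step; returns True once the target state is reached."""
    if is_done():
        log.info("%s: already in target state, skipping", name)
        return True
    for attempt in range(1, retries + 1):
        try:
            apply()
            if is_done():
                log.info("%s: done after attempt %d", name, attempt)
                return True
            log.warning("%s: attempt %d ran but target state not reached", name, attempt)
        except Exception as exc:  # log and retry; transient errors are common in deployments
            log.warning("%s: attempt %d failed: %s", name, attempt, exc)
        if attempt < retries:
            sleep(base_delay_s * 2 ** (attempt - 1))  # exponential backoff
    log.error("%s: giving up after %d attempts", name, retries)
    return False
```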

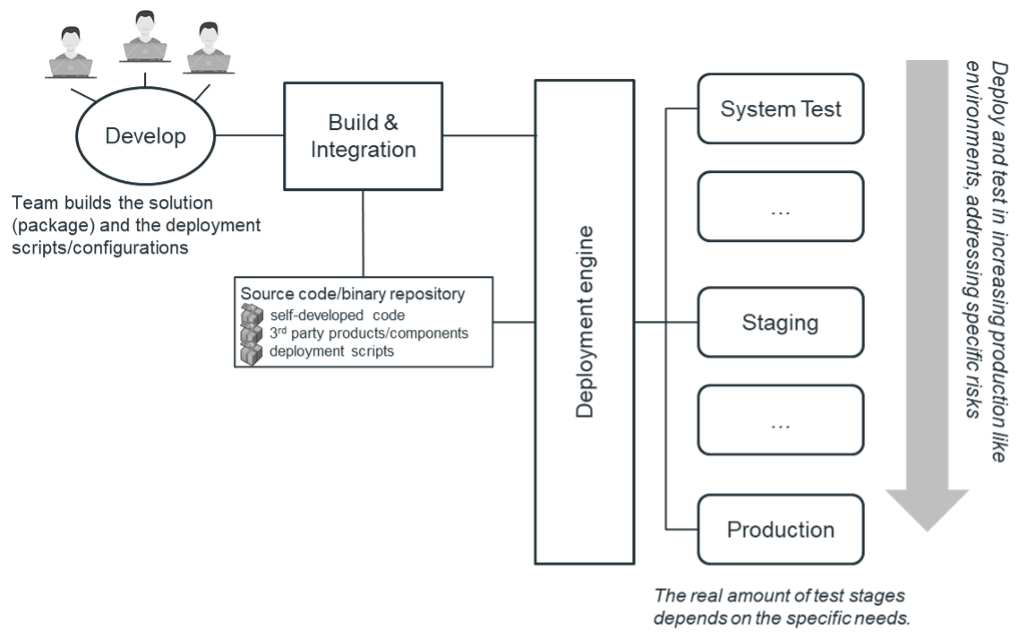

As described in the graphic below, application code is developed and packaged. The packages are then deployed to the system test environment. The purpose of this stage is to make sure that the deployment scripts work reliably and that application functionality is validated.

Figure 3: Deployment and test stages/systems. Source: Micro Focus

As soon as you have a good-enough version, deploy it to staging to check whether the solution also works in this staging system, which is as congruent with production as possible. Also, run all tests that could not be executed in the system test environment because of missing production-like data or missing infrastructure, such as standby databases for high-availability setups.

You might need to deploy some other environments for specific tests such as performance, stress, internationalization, continuous hours of operations, and disaster recovery.

When you finally deploy to production, you should have high confidence that all relevant issues are solved. Failing in staging is still okay, but failing in production is a different story.
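The promotion path described above can be sketched as a loop over environments: the same package, identified by its SHA-256 fingerprint, moves from system test to staging to production and stops at the first environment whose deployment or tests fail, so a failure in staging never reaches production. The `Environment` type and its `deploy` and `verify` callables are hypothetical stand-ins for your deployment tooling and test suites.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Environment:
    name: str
    deploy: Callable[[bytes], bool]  # deploys the package, True on success
    verify: Callable[[], bool]  # runs this stage's tests, True if they pass


def promote(package: bytes, environments: List[Environment]) -> Tuple[str, List[Tuple[str, bool]]]:
    """Deploy one immutable package through the environments in order; stop at the first failure."""
    # Same artifact everywhere, traceable by its digest.
    fingerprint = hashlib.sha256(package).hexdigest()
    results: List[Tuple[str, bool]] = []
    for env in environments:
        ok = env.deploy(package) and env.verify()
        results.append((env.name, ok))
        if not ok:
            break  # do not promote any further
    return fingerprint, results


if __name__ == "__main__":
    envs = [
        Environment("system-test", lambda pkg: True, lambda: True),
        Environment("staging", lambda pkg: True, lambda: False),  # simulated staging failure
        Environment("production", lambda pkg: True, lambda: True),
    ]
    print(promote(b"example package contents", envs))
```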

The less similar staging and production are, the higher the risk of service disruptions and outages. This is especially true when your deployment scripts must differ between staging and production. Carefully balance your investments in a congruent staging infrastructure and environment.
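A simple guard against unnoticed divergence is to diff the environment settings you control. This sketch assumes that staging and production configuration can be loaded as flat key-value dictionaries; the keys shown are hypothetical. Every reported difference should be either a deliberate, documented trade-off or a risk to fix.

```python
from typing import Any, Dict, Tuple

MISSING = "<missing>"


def config_drift(staging: Dict[str, Any], production: Dict[str, Any]) -> Dict[str, Tuple[Any, Any]]:
    """Return {key: (staging_value, production_value)} for every setting that differs."""
    drift = {}
    for key in sorted(staging.keys() | production.keys()):
        s, p = staging.get(key, MISSING), production.get(key, MISSING)
        if s != p:
            drift[key] = (s, p)
    return drift


if __name__ == "__main__":
    staging = {"db_replicas": 1, "tls": True, "load_balancer": "nginx"}
    production = {"db_replicas": 3, "tls": True, "load_balancer": "nginx", "waf": "enabled"}
    print(config_drift(staging, production))
```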

Not separating deploy and release

Many people do not distinguish between deploy and release and therefore miss a key advantage. Deploying means bringing new code to the production system to check its compatibility with existing code, without exposing the new functionality to end users. Releasing means exposing the new functionality to users.

With the right technical skills, this is not an all-or-nothing approach but can be a gradual exposure of functionality to dedicated users.

A possible scenario could look as follows:

- Code is deployed to production.

- If the deployment succeeds, new features are turned on just for some testers. They can do the required testing without affecting real users. Meanwhile, watch your telemetry closely for any anomalies.

- If everything works as expected, expose functionality to real users. Start small and, if everything is okay, expose to larger user groups until all users have access.

At any stage, if there are negative side effects, roll back or fix forward immediately.
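Gradual exposure is often implemented with feature flags. The following sketch does not reproduce any particular feature-flag product's API; the flag name, tester list, and percentages are hypothetical. Testers always see the feature, and everyone else is placed in a stable bucket from 0 to 99 by hashing the flag name and user ID, so raising the rollout percentage only adds users and never switches existing ones off. Setting the percentage back to 0 is the release-side rollback: the code stays deployed, but the feature is hidden again.

```python
import hashlib
from typing import AbstractSet


def bucket(flag: str, user_id: str) -> int:
    """Stable bucket in [0, 99] for this user and flag."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def is_enabled(flag: str, user_id: str, testers: AbstractSet[str], rollout_percent: int) -> bool:
    """Testers always get the feature; others get it once their bucket falls under the rollout."""
    if user_id in testers:
        return True
    return bucket(flag, user_id) < rollout_percent


if __name__ == "__main__":
    testers = {"qa-anna", "qa-ben"}
    users = [f"user-{i}" for i in range(1000)]
    for pct in (0, 5, 25, 100):
        enabled = sum(is_enabled("new-checkout", u, testers, pct) for u in users)
        print(f"{pct:>3}% rollout -> {enabled} of {len(users)} users")
```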

Treating deploy and release as a single step increases the risk of service interruptions and quality issues. Separate deploy from release to avoid this.

Not making a conscious decision about your delivery model

Some conditions make it hard to work with small batch sizes and negate the benefits of a well-working flow-based system. This might be a trigger to rethink your delivery model.

Here are some examples of conditions that can do this.

Scenario A

An on-prem product that has been successful on the market for years has many customers and a huge support matrix. However, that support matrix requires a lot of time and capacity to test different environments, which piles up work and leads to larger batch sizes and longer stabilization phases.

Customer satisfaction is suboptimal, with users finding bugs in their specific on-premises installations. This leads to an increased maintenance effort and reduces available capacity. This lost capacity can't be used to invest in new, innovative features or additional solutions.

The SaaS competition is catching up. In this case, a switch to a SaaS-based system with a continuous delivery model could free up the required capacity to increase customer satisfaction and increase market share.
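The support-matrix burden in Scenario A grows multiplicatively: every supported version of every dimension multiplies the number of environments to test. A small sketch with a hypothetical matrix makes the arithmetic visible; adding a single database option adds six environments, not one.

```python
from itertools import product
from typing import Dict, List

# Hypothetical support matrix for an on-prem product.
SUPPORTED: Dict[str, List[str]] = {
    "os": ["RHEL 8", "RHEL 9", "Windows Server 2022"],
    "database": ["Oracle", "SQL Server", "PostgreSQL"],
    "app_server": ["Tomcat 9", "Tomcat 10"],
}


def matrix_combinations(dimensions: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Every combination a full regression run would have to cover."""
    names = list(dimensions)
    return [dict(zip(names, combo)) for combo in product(*dimensions.values())]


if __name__ == "__main__":
    print(len(matrix_combinations(SUPPORTED)))  # prints 18 (3 * 3 * 2)
    with_mysql = {**SUPPORTED, "database": SUPPORTED["database"] + ["MySQL"]}
    print(len(matrix_combinations(with_mysql)))  # prints 24 (3 * 4 * 2)
```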

Scenario B

A company consumes a commercial off-the-shelf (COTS) product that releases every six to 18 months. Consuming new versions is a risky and expensive three-to-six-month project because of required customizations and integrations into the existing environment.

To avoid the risks and costs of such an upgrade, the company skipped a couple of releases. It is not receiving new functionality but still has to deal with issues somehow. The provider must support many old versions and make extra effort to provide the required patches and hotfixes.

This is a lose-lose situation. Replacing the COTS solution with a SaaS solution could solve the problem. The frequent updates reduce the risk and effort to integrate it into the customer environment. The customer can decide about the timing of the upgrade to test and prepare the rollout. The SaaS provider should also be clear about the backward compatibility of the solution.

Not having a shift-left focus

The later you perform certain activities, the later that new functionality is ready to be released. This becomes obvious when there is a several-weeks-long hardening phase for each release. The longer the hardening phase, the more potential to shift activities left.

To understand what you can shift left, look at the activities you perform in the hardening phase and figure out how they can be done earlier. Anchor these activities in the definition of done (DoD) as exit criteria of a stage in your story or feature Kanban system.
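Encoding the definition of done as explicit exit criteria makes the shift-left rule enforceable: a card cannot leave a Kanban stage while criteria are still open. The sketch below is hypothetical; the stage names and criteria stand in for activities that often pile up in a hardening phase, such as security scans, performance checks, and documentation.

```python
from typing import Dict, List, Set

# Hypothetical DoD: activities moved out of the hardening phase into stage exit criteria.
DEFINITION_OF_DONE: Dict[str, List[str]] = {
    "development": ["unit tests pass", "static analysis clean"],
    "testing": ["security scan clean", "performance smoke test passed", "docs updated"],
}


def missing_exit_criteria(stage: str, completed: Set[str]) -> List[str]:
    """Criteria for `stage` not yet met, in the order they are defined."""
    return [c for c in DEFINITION_OF_DONE.get(stage, []) if c not in completed]


def can_leave(stage: str, completed: Set[str]) -> bool:
    """A card may move to the next stage only when no exit criterion is open."""
    return not missing_exit_criteria(stage, completed)


if __name__ == "__main__":
    done = {"security scan clean"}
    print(missing_exit_criteria("testing", done))
```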

It's time to optimize your pipeline

Take care of your golden goose: Optimize your DevOps pipeline to achieve the maximum benefit from your software development. Always think about flow and continuously remove bottlenecks. Automate everything; in the mid to long term it will pay off in terms of increased flow, faster feedback, and better quality.

Use your test stages wisely and find defects as soon as possible. Remember, you can't scale crappy code.

And be conscious about your delivery paradigm. Can you win in the long term against your competition? Shift activities left, and reduce and eliminate hardening times. Keep these things in mind, and the golden eggs are yours.
