Data deduplication technology has been around for a long time but has undergone a bit of a resurgence lately as more storage vendors have added this feature to their hardware and software products. But simply having a data deduplication feature doesn't mean you'll use it well. Even many experienced storage administrators and architects labor under several common misconceptions.

Whether you are a system architect, a planner, a procurement specialist, or a member of the IT operations staff, and whether your data is on primary disk storage, archival storage, or all-flash storage arrays, you need to understand the basics (and pitfalls) of deduplication schemes.

Data reduction ratios: Your mileage may vary

While deduplication is available for both primary and secondary storage, the data footprint reduction ratios you can achieve on each differ greatly. People frequently fall into the trap of assuming that the ratio they achieve on a dedicated deduplication backup appliance will carry over to a primary array.

Deduplication itself runs automatically, but the data reduction ratio it delivers varies widely. For example, if you need to store 100TB of data, it makes a huge difference whether you assume a 10:1 ratio and buy a 10TB device or assume a 2:1 ratio and buy a 50TB device instead. You must have a good idea of what's achievable before you buy.
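The sizing arithmetic is a simple division of logical data by the assumed ratio. The minimal sketch below makes the stakes concrete; the function name is illustrative, and it deliberately ignores RAID overhead, growth, and free-space headroom, all of which real sizing must add on top.

```python
def required_capacity_tb(logical_tb: float, dedup_ratio: float) -> float:
    """Physical capacity (TB) needed to hold logical_tb at an assumed ratio of dedup_ratio:1.

    Illustrative only: ignores RAID overhead, growth, and free-space headroom.
    """
    if dedup_ratio <= 0:
        raise ValueError("dedup_ratio must be positive")
    return logical_tb / dedup_ratio


# 100TB of logical data under an optimistic and a conservative assumption
for ratio in (10, 2):
    print(f"{ratio}:1 -> {required_capacity_tb(100, ratio):.0f}TB")
```

A wrong ratio assumption changes the purchase by a factor of five here, which is why the estimate deserves scrutiny before the order goes out.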

Having spent an extensive amount of time designing backup environments and working on deduplication on primary arrays, I have come across many misunderstandings about proper use. If you are using the technology in your environment or are involved in architecture designs and sizing that include deduplication technology, this article is for you. Understanding the eight deadly pitfalls below will help you more confidently deal with deduplication-related questions and better estimate what a realistic ratio should be for your environment.

1. Higher deduplication ratios yield proportionally larger data reduction benefits

If one vendor promises a 50:1 deduplication ratio, is that five times better than another vendor's 10:1 claim? Since deduplication is all about reducing your capacity requirements, the real question is how much capacity you save. A 10:1 ratio yields a 90% reduction in size, while a 50:1 ratio bumps that up to 98%. That's a difference of only 8 percentage points.

In general, the higher deduplication ratios climb, the smaller the additional data reduction each further increase delivers: the law of diminishing returns.
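The diminishing returns follow directly from the fact that a ratio of r:1 saves 1 - 1/r of the original capacity. A short sketch (the function name is illustrative) prints the savings for a range of ratios and the percentage-point gain each step adds:

```python
def capacity_savings(ratio: float) -> float:
    """Fraction of capacity saved at a data reduction ratio of ratio:1."""
    return 1 - 1 / ratio


previous = None
for ratio in (2, 5, 10, 20, 50, 100):
    saved = capacity_savings(ratio)
    # Percentage-point gain over the previous (lower) ratio in the list
    gain = "" if previous is None else f" (+{(saved - previous) * 100:.1f} points)"
    print(f"{ratio:>3}:1 saves {saved:.1%}{gain}")
    previous = saved
```

On 100TB of logical data, moving from 10:1 to 50:1 shrinks the stored footprint from 10TB to 2TB: real, but far from "five times better" in terms of total savings.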

2. There is a clear definition for the term “deduplication”

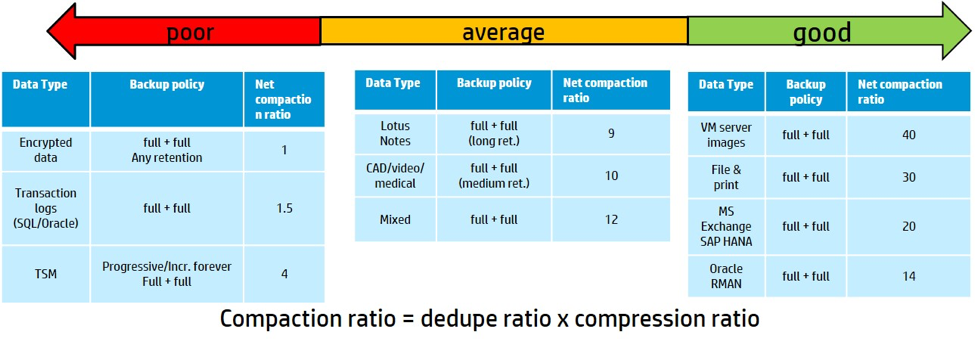

Deduplication reduces the amount of data stored by removing duplicate data items from the data store. It can operate at the object/file level or the physical block level, and it can be application- or content-aware. Most products combine deduplication with data compression to further reduce the data footprint. Some vendors report the two as a single figure, others call them out separately, and some coin terms such as “compaction,” which is just a fancy way of saying “deduplication with compression.” Unfortunately, there is no single, all-encompassing, widely accepted definition of deduplication.
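To make the block-level variant concrete, here is a minimal sketch of fixed-size block deduplication followed by compression. The 4KB block size, SHA-256 fingerprints, and per-block zlib compression are illustrative assumptions; real products use their own block sizes, often variable-length chunking, and their own hashing and compression schemes.

```python
import hashlib
import zlib


def dedup_and_compress(data: bytes, block_size: int = 4096) -> tuple[int, int, int]:
    """Return (logical bytes, bytes after dedup, bytes after dedup + compression)."""
    store: dict[str, bytes] = {}  # fingerprint -> first copy of each unique block
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        fingerprint = hashlib.sha256(block).hexdigest()
        store.setdefault(fingerprint, block)  # duplicates are dropped, only a reference remains
    deduped = sum(len(block) for block in store.values())
    # Compress each unique block on its own, as a simple stand-in for inline compression
    compressed = sum(len(zlib.compress(block)) for block in store.values())
    return len(data), deduped, compressed


# Eight identical 4KB blocks followed by one distinct 4KB block
sample = b"A" * 4096 * 8 + bytes(range(256)) * 16
logical, deduped, compressed = dedup_and_compress(sample)
print(f"logical {logical}B, after dedup {deduped}B, after compression {compressed}B")
```

Whether a vendor quotes the logical-to-deduped ratio, the logical-to-compressed ratio, or calls the latter "compaction" is exactly the definitional ambiguity described above.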

3. Deduplication ratios on primary storage are similar to those achievable on backup appliances

Storage vendors use many different deduplication algorithms. Some are more CPU-intensive and sophisticated than others. It should come as no surprise, then, that deduplication ratios differ widely.

However, the biggest factor affecting the deduplication ratio you'll achieve is how much of your data is identical or of a similar type. For that reason, backup devices, which hold multiple copies of the same data in weekly backups, almost always show higher deduplication ratios than primary arrays do. You might keep multiple copies of data on your primary arrays, but those copies are usually snapshots, and because snapshots tend to be space-efficient, they already act as a kind of built-in deduplication. That's why primary storage deduplication ratios of 5:1 are about as good as it gets, while backup appliances can achieve 20:1 or even 40:1, depending on how many copies you keep.

4. All data types are equal

As should be clear by now, this is patently false. Data types that contain repetitive patterns within the data stream lend themselves well to deduplication; others do not. The deduplication ratio you can achieve depends on several factors:

- Data type: Pre-compressed, encrypted, and metadata-rich data types show lower deduplication ratios.

- Data change rate: The higher the daily change rate, the lower the deduplication ratio. This is especially true for purpose-built backup appliances (PBBAs).

- Retention period: The longer the retention, the more copies you'll have on your PBBA, raising your deduplication ratio.

- Backup policy: A daily full backup strategy, as opposed to an incremental or differential one, will yield higher deduplication ratios because much of the data is redundant.
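The interplay of change rate and retention can be illustrated with a deliberately simplified model. It assumes daily full backups of one data set, a first full that is stored in its entirety, later fulls that add only their changed fraction as new unique data, and no compression. The model and its function name are hypothetical, not a vendor formula, and it does not cover the data type or backup policy factors.

```python
def modeled_dedup_ratio(copies: int, daily_change_rate: float) -> float:
    """Simplified dedup ratio for `copies` daily full backups of the same data set.

    Hypothetical model: the first full is stored whole, each later full adds only
    daily_change_rate of a full as new unique data, and compression is ignored.
    """
    logical = copies                                # measured in units of one full backup
    stored = 1 + (copies - 1) * daily_change_rate   # first full + changed data of later copies
    return logical / stored


for retention in (7, 30, 90):
    for change in (0.01, 0.05):
        print(f"{retention:>2} copies, {change:.0%} daily change: "
              f"{modeled_dedup_ratio(retention, change):.1f}:1")
```

Under these assumptions the ratio grows with retention but can never exceed 1 / daily_change_rate, so a 5% daily change rate caps it below 20:1 no matter how long you keep backups.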

The table below provides a rough overview of the data compaction ratios that can realistically be expected on a PBBA. Remember that ratios on primary storage will be considerably lower.

5. Grouping dissimilar data types increases your deduplication ratios

In theory, if you mix different data types into a huge deduplication pool, the likelihood of finding identical blocks or objects should increase. In practice, however, the probability of that happening remains low between dissimilar data types, such as databases and Exchange email, while a larger pool makes hash comparisons and related operations more complex and time-consuming. You're better off separating deduplication pools by data type. Going wide within a given data type, on the other hand, can substantially increase deduplication ratios.

For example, if you perform deduplication within a single virtual machine (VM) image, you will get one ratio, but if you target multiple copies of the same VM image (e.g., by performing daily backups of that VM to a deduplication store), your ratio will increase. Combine 50 VMs into the same store and, since those VM images are likely to be very similar, you'll improve your ratio even further. The wider you can go with your deduplication pool within a single data type, the better.
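The VM example can be simulated with synthetic images. The sketch below assumes hypothetical VM images of ten 4KB blocks each, nine of them shared OS blocks and one unique to the VM; these proportions, the block contents, and all names are illustrative assumptions, not measurements from any real environment.

```python
import hashlib

BLOCK = 4096  # assumed fixed block size


def make_block(label: str) -> bytes:
    """Deterministic 4KB block whose content depends only on `label`."""
    return hashlib.sha256(label.encode()).digest() * (BLOCK // 32)


def make_vm_image(vm_id: int, base_blocks: int = 9, unique_blocks: int = 1) -> bytes:
    """Hypothetical VM image: shared OS blocks plus a few VM-specific blocks."""
    base = [make_block(f"os-{i}") for i in range(base_blocks)]
    own = [make_block(f"vm{vm_id}-{i}") for i in range(unique_blocks)]
    return b"".join(base + own)


def dedup_ratio(images: list[bytes]) -> float:
    """Logical bytes divided by bytes left after block-level dedup across one pool."""
    seen: set[bytes] = set()
    logical = 0
    for image in images:
        logical += len(image)
        for offset in range(0, len(image), BLOCK):
            seen.add(hashlib.sha256(image[offset:offset + BLOCK]).digest())
    return logical / (len(seen) * BLOCK)


images = [make_vm_image(vm) for vm in range(50)]
one_image = dedup_ratio(images[:1])
one_vm_30_days = dedup_ratio(images[:1] * 30)
fifty_vms_30_days = dedup_ratio([image for image in images for _ in range(30)])
print(f"one image: {one_image:.1f}:1, one VM x 30 backups: {one_vm_30_days:.1f}:1, "
      f"50 VMs x 30 backups in one pool: {fifty_vms_30_days:.1f}:1")
```

With these assumptions, giving each VM its own pool tops out at 30:1, while a single pool for all 50 similar VMs exceeds 250:1 because the shared OS blocks are stored only once.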

6. Your first backup will show your predicted deduplication ratio

This misconception comes up in discussions of relative deduplication ratios on primary storage versus backup appliances. If you hold one copy of the data for a given application, virtual machine, or the like, you'll see some deduplication and compression. But your ratio will only soar when you keep multiple copies of very similar data, such as multiple backups of the same database.

The figure below shows a very typical deduplication curve. This one is for an SAP HANA environment, but most application data follows the same curve. Your initial copy, or backup, shows some deduplication benefits, but most of the savings are due to data compression. As you retain more copies, however, your deduplication ratio for the overall store will increase, as shown by the blue line. The ratio for an individual backup (orange line) skyrockets starting with the second copy.
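The shape of that curve can be reproduced with a toy model. It assumes repeated full backups where the first copy is reduced only by compression (2:1 here), each later copy adds only its changed fraction (5% here, also compressed), and nothing else deduplicates. These parameters and the function name are assumptions for illustration; the sketch does not reproduce the SAP HANA data in the figure.

```python
def dedup_curve(copies: int, change_rate: float, compression: float) -> list[tuple[int, float, float]]:
    """Per-copy and store-wide data reduction for repeated full backups.

    Hypothetical model: copy 1 is reduced only by `compression`; each later copy adds
    only `change_rate` of a full backup as new data, also compressed.
    Returns (copy number, per-copy ratio, store-wide ratio) tuples.
    """
    rows = []
    stored_total = 0.0
    for n in range(1, copies + 1):
        new_data = 1.0 if n == 1 else change_rate  # unique fraction of one full backup
        stored = new_data / compression            # physical space this copy adds
        stored_total += stored
        rows.append((n, 1.0 / stored, n / stored_total))
    return rows


for n, per_copy, overall in dedup_curve(copies=10, change_rate=0.05, compression=2.0):
    print(f"copy {n:>2}: this copy {per_copy:5.1f}:1, whole store {overall:5.1f}:1")
```

The per-copy column corresponds to the orange line (flat at the compression ratio for the first copy, then jumping), and the store-wide column to the blue line, which climbs steadily as more copies are retained.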

7. You can't increase deduplication ratios

It would be naive to believe that there is no way to artificially boost deduplication ratios. If your goal is to achieve the highest possible ratio, then store as many copies of your data as possible (long retention times). Your actual stored capacity on disk will increase as well, but your ratio will soar.

Changing your backup policy works as well, as shown in the real-world example below, which compares daily full backups with weekly full backups combined with either daily incremental or daily differential backups. In this case, a daily full backup policy drives the highest ratio. However, the actual space used on disk is similar with all three approaches. So be wary when a storage vendor promises extremely high deduplication ratios, since achieving them might require a change in your backup schedule.

| Backup schedule | Logical backup data written | Dedup ratio (store once) | Stored physical capacity | Resulting dedup ratio (logical vs. physical) |
|---|---|---|---|---|
| Daily full | 30x 10TB = 300TB | 38:1 | 8TB | 38:1 |
| Weekly full, daily incremental | 4x 10TB = 40TB | 15:1 | 11TB | 6:1 |
| Weekly full, daily differential | 4x 10TB = 40TB | 15:1 | 10TB | 13:1 |

8. There is no way to predetermine deduplication ratios

Every environment is different, so it's hard to accurately predict real-world deduplication ratios. However, vendors do offer primary storage and backup assessment tools that are lightweight to run and provide insights into data types, retention periods, and the like. These tools typically allow a reasonably accurate prediction of achievable deduplication ratios.

Also, vendors have information about the ratios their installed base has achieved, and they can even break that down by industry segment. While there's no guarantee that you'll see the same benefits, it should provide some peace of mind. And if peace of mind isn't enough, ask the vendor for a guarantee. Some vendors do offer deduplication guarantees under certain circumstances.

Finally, a proof-of-concept conducted on a representative subset of your data will provide even more accurate estimates.

Gentlemen, start your deduping

There is no magic behind deduplication, but now that you understand the basics, you should be well equipped to maximize the effectiveness of deduplication technology on your storage arrays and appliances.

What sorts of ratios have you achieved on your data?
