In an age of universal connectivity, delivering better applications more quickly and at lower cost is a strategic imperative for almost every company. Predictive analytics is emerging as a powerful tool to meet all three goals.

This subset of analytics uses statistical algorithms to identify patterns in current data, forecast future events, and suggest actions that might influence those events. Businesses use it to schedule preventive maintenance on expensive equipment, fine-tune inventory flows to match demand, and target special treatment for hospital patients most likely to develop complications and require costly return visits.

Emerging tools are now applying predictive analytics to the development and delivery of software. Called "predictive delivery," this analysis and the actions it prompts can provide more accurate estimates of the time, effort, and cost associated with software development. It can help developers and development managers understand whether teams can meet their deadlines, whether testers are checking the process flows users are most likely to execute, and whether the business is delivering the features users care most about. Combined with automation, predictive delivery can also reduce testing costs even as the number and complexity of applications grow.

Benefits will occur in three areas:

- Predictive planning

- Predictive DevOps

- Predictive quality and testing

Predictive planning

Developers and their managers often underestimate how much time, effort, and money it will take to deliver a set amount of code. They'll often run into the same delivery problems, year after year, with the same staff working on similar projects. Predictive analytics can help organizations understand these repetitive mistakes, which often result in code that is late, fails to meet requirements, or is buggy.

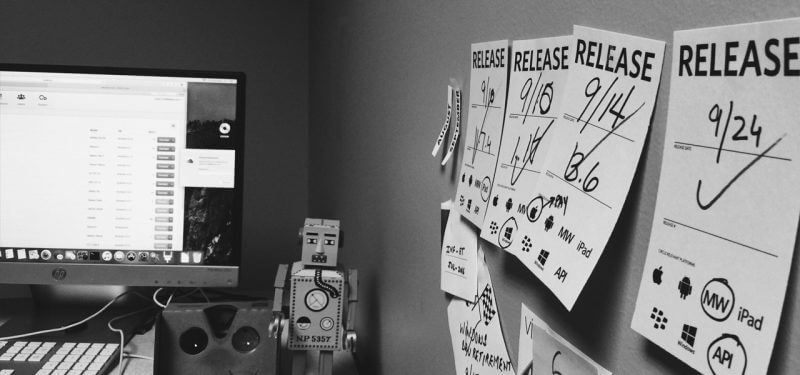

By factoring in how many lines of code various developers have delivered in the past and how long it took them to write that code, managers can better predict whether teams will meet scheduled release dates and how adding (or removing) certain people might affect delivery. Analyzing usage data from applications in production can help identify which functions are most popular with users, or yield the most revenue, and thus should be prioritized if teams run short of time in a delivery sprint.
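As a rough illustration of the schedule forecast described above, the sketch below estimates how many sprints a team needs from its past throughput. The function name, the sample numbers, and the choice of "average minus one standard deviation" as the pessimistic rate are all assumptions made for this example, not an established method.

```python
from statistics import mean, stdev

def forecast_sprints(past_throughput, remaining_work):
    """Return (expected, pessimistic) sprint counts for the remaining work.

    past_throughput: units of work delivered in each past sprint.
    remaining_work: units of work still to be delivered.
    """
    avg = mean(past_throughput)
    # Sample standard deviation needs at least two data points.
    spread = stdev(past_throughput) if len(past_throughput) > 1 else 0.0
    expected = remaining_work / avg
    # Assumed pessimistic rate: one standard deviation below the average.
    slow_rate = max(avg - spread, 1e-9)
    pessimistic = remaining_work / slow_rate
    return expected, pessimistic

# Hypothetical history: lines of code delivered in each of four sprints.
history = [1200, 900, 1100, 800]
expected, pessimistic = forecast_sprints(history, remaining_work=5000)
sprints_until_release = 6
on_track = pessimistic <= sprints_until_release
print(f"expected={expected:.1f} pessimistic={pessimistic:.1f} on_track={on_track}")
```

Running the same forecast with one developer's history removed or added gives a crude view of how a staffing change might move the date.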

Predictive DevOps

DevOps, the merging of application development and operations, is designed to speed new applications to market. As data from the production environment flows back to developers, predictive analytics can help pinpoint which coding practices are leading to an unsatisfactory user experience in the field.

Analyzing data regarding current application usage and failure patterns can help predict which features, or sequence of user actions, are likely to trigger crashes and thus need to be fixed in future releases.
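One simple way to approximate this kind of analysis is to count how often each short sequence of user actions appears in sessions that crashed. The sketch below assumes session logs have already been reduced to a list of action names plus a crash flag; the data, the function name, and the `min_count` threshold are hypothetical.

```python
from collections import Counter

def crash_rate_by_sequence(sessions, length=2, min_count=2):
    """Rank action sequences by the share of sessions containing them that crashed.

    sessions: iterable of (actions, did_crash) pairs.
    length: number of consecutive actions in each sequence.
    min_count: ignore sequences seen in fewer sessions than this.
    """
    seen = Counter()
    crashed = Counter()
    for actions, did_crash in sessions:
        # Count each distinct sequence once per session.
        grams = {tuple(actions[i:i + length])
                 for i in range(len(actions) - length + 1)}
        for gram in grams:
            seen[gram] += 1
            if did_crash:
                crashed[gram] += 1
    rates = {g: crashed[g] / n for g, n in seen.items() if n >= min_count}
    return sorted(rates.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical production sessions: (actions taken, whether the app crashed).
sessions = [
    (["login", "search", "open_map"], True),
    (["login", "search", "checkout"], False),
    (["login", "open_map", "zoom"], False),
    (["search", "open_map", "zoom"], True),
]
ranked = crash_rate_by_sequence(sessions)
print(ranked[0])
```

A high crash rate for a sequence is a correlation, not proof of cause, so the ranked list is best treated as a set of leads for developers to investigate.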

Predictive quality and testing

As users interact with different parts of the application in different ways, they execute the code in different sequences, with any particular sequence potentially triggering a failure. Different users (say, those tapping map data for geographic locations) will require data from separate sources, making the links to the required databases another potential source of trouble.

Rather than attempting to test every possible combination of user actions and the required interfaces with other systems, predictive analytics identifies the most common paths users take through the application and where the application is failing, and uses that data to focus testing on the most troublesome areas. Predictive analytics can fine-tune testing efforts further by using tiling algorithms that measure the similarities among all the user execution flows, finding and focusing on the overlaps that indicate the most common execution paths.
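The overlap idea can be sketched with something much simpler than a full tiling algorithm: count how many recorded user flows contain each short sub-path, then test the most widely shared sub-paths first. This is a stand-in technique chosen for brevity, and the flows and function name below are invented for the example.

```python
from collections import Counter

def shared_subpaths(flows, length=3):
    """Count how many flows contain each contiguous sub-path of the given length."""
    counts = Counter()
    for flow in flows:
        # A set ensures each sub-path is counted once per flow.
        subpaths = {tuple(flow[i:i + length])
                    for i in range(len(flow) - length + 1)}
        counts.update(subpaths)
    return counts

# Hypothetical user execution flows captured from production.
flows = [
    ["home", "search", "results", "detail", "cart"],
    ["home", "search", "results", "filter", "detail"],
    ["login", "home", "search", "results", "detail"],
]
counts = shared_subpaths(flows)
top = counts.most_common(1)
print(top)
```

Sub-paths that appear in most flows are natural candidates for automated regression tests, while those that appear in only one flow can be given lower priority.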

Analyzing past test data, along with current production data, can also help determine what types of tests to run on code, which parts of an application are most at risk of defects, and how long it will take to fix a certain set of defects. It can also identify areas where new tests are needed to cover areas of high customer use that are not now being tested.

Finally, predictive analytics can help you perform faster, more thorough root-cause analysis of failed tests. This requires gathering complete data on exactly where and how the failure took place. Analytics then compares all the parameters of every test that passed with those that failed, highlighting the differences such as a different operating system, device type, or supporting resources such as a database. By focusing on the differences between the successful and the failed tests, developers can much more quickly troubleshoot and resolve problems.
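The comparison of passed and failed tests can be illustrated with a small sketch that looks for parameter values present in every failed run and in no passed run. The record layout (`passed`, `params`) and the sample runs are assumptions made for this example; real test results would need to be mapped into a similar shape first.

```python
def failure_only_values(runs):
    """Return (parameter, value) pairs shared by all failed runs and absent from passed runs."""
    passed = [r["params"] for r in runs if r["passed"]]
    failed = [r["params"] for r in runs if not r["passed"]]
    if not failed:
        return set()
    # Every (parameter, value) pair seen in at least one passing run.
    passed_pairs = {pair for params in passed for pair in params.items()}
    # Pairs that every failing run has in common.
    common_failed = set(failed[0].items())
    for params in failed[1:]:
        common_failed &= set(params.items())
    return common_failed - passed_pairs

# Hypothetical test runs with their environment parameters.
runs = [
    {"passed": True,  "params": {"os": "android", "device": "phone",  "db": "primary"}},
    {"passed": True,  "params": {"os": "ios",     "device": "tablet", "db": "primary"}},
    {"passed": False, "params": {"os": "ios",     "device": "phone",  "db": "replica"}},
    {"passed": False, "params": {"os": "android", "device": "tablet", "db": "replica"}},
]
suspects = failure_only_values(runs)
print(suspects)
```

In this invented data set the operating system and device type vary across both passing and failing runs, so only the database setting stands out as a likely place to start troubleshooting.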

Getting started

Predictive delivery, like any other analytics process, relies on data. That's why it's so important to save the massive amounts of data created throughout the software development life cycle. This includes log files, results and reports from software tests, as well as data from system and application monitors. It also includes information about updated requirements and user stories from project management applications, as well as data about each build generated by compilers.

While it may seem counterintuitive at a time when companies are struggling to meet rising storage needs, predictive delivery is one area where keeping data is worthwhile, even if it's unclear whether or when that data will be needed. Tiered storage, in which seldom-used data is moved to slower but less expensive commodity disk or tape, and cloud storage options are making it steadily cheaper to store very large quantities of data. Then, when a little-noticed feature in an application suddenly becomes wildly popular, the real-world usage data will be available to help troubleshoot any problems with it.

Training your developers and business users in big data techniques is another worthwhile investment. Even if they don't become full-fledged data scientists, just the knowledge of what insights predictive analytics can deliver will empower them to ask new questions.

Most important, don't underestimate the power of predictive delivery to reduce the cost and time required to deliver critical applications to market. In the next several years, analytic tools will mature, analytics training will become more widely available, and storage costs will continue to fall. Laying the groundwork now for predictive delivery can help you be among the first to reap these benefits.
